Let’s be clear: “bad” graphics are subjective. But if a game looks noticeably worse than its contemporaries, several factors are usually at play.

Technical Limitations: This isn’t always about the hardware. Poor engine optimization, inefficient coding, or using outdated technology can significantly impact visuals, regardless of the system’s capabilities. Think of it like a Formula 1 car with flat tires – it’s got the potential for speed, but the execution is flawed.

Developer Inexperience: A team lacking experience in a specific engine, or simply lacking skilled artists and programmers, can result in visually underwhelming games. It’s like trying to bake a soufflé without knowing the proper techniques – the result might be edible, but far from impressive.

Budget Constraints: Developing high-fidelity graphics is expensive. It requires investing in powerful hardware for rendering, high-resolution textures, and skilled artists for modeling and animation. A limited budget often leads to compromises on visual quality, forcing developers to prioritize other aspects of the game.

Time Constraints: Pushing a game out the door quickly means cutting corners. Visual fidelity is often one of the first things to suffer under tight deadlines. This often results in reused assets, lower polygon counts, and simplified textures.

Artistic Style Choices: Sometimes, a “low-poly” or stylized look is a deliberate artistic choice, not a result of technical limitations. Think of Minecraft’s blocky, low-resolution look or Cuphead’s hand-drawn 1930s cartoon style; these deliberately stylized graphics are a key part of their charm and appeal.

Platform Limitations: A game designed for a less powerful platform (e.g., mobile) might inherently have lower graphical fidelity compared to a PC or console title. This isn’t necessarily a sign of poor development; it’s simply adapting to the target hardware.

- Key takeaway: Judging a game’s graphics solely as “good” or “bad” is an oversimplification. Consider the context: budget, target platform, artistic vision, and development time all play significant roles.

Which graphics settings put the most strain on the CPU?

Yo, what’s up, gamers! Let’s talk CPU load in games. It’s not just about your GPU, you know. The CPU is a major player, especially in modern titles.

Here’s how the common graphics settings load your CPU. Note that some of the usual suspects mostly hit the GPU instead:

- Resolution: Mostly a GPU cost, not a CPU one. Higher resolutions mean more pixels for the GPU to shade, while the CPU’s per-frame work (game logic, physics, preparing draw calls) stays roughly the same. That’s why lowering resolution does little in a CPU-bound game.

- Shadows: Shadow maps, and especially cascaded shadow maps, re-render parts of the scene from the light’s point of view (once per cascade), which multiplies the draw calls the CPU has to submit. Ray-traced shadows are mainly a GPU cost, but the CPU still has to prepare and update the scene data the GPU traces against, so ray tracing can raise CPU load too.

- Level of Detail (LOD): This controls how much detail is rendered for objects at different distances. The extra triangles from higher LOD settings are mostly GPU work, but keeping more detailed objects visible also adds culling and draw-call work for the CPU, especially if you’re in a busy area.

- Anisotropic Filtering (AF): A GPU task with negligible CPU impact. Lowering it won’t relieve a CPU bottleneck.

- Post-Processing Effects: Bloom, motion blur, depth of field… These run on the GPU as full-screen passes, so they cost GPU time rather than CPU time, even when combined.

- Shadow Range/Draw Distance: Longer shadow and draw distances increase the number of objects the CPU needs to process, leading to higher CPU usage. Think about all those things needing to cast shadows and be rendered miles away!

- Crowd Density/NPC Count: More characters on screen means much more CPU work to manage AI, physics, and animations. Massive battles? Prepare for CPU meltdown!
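
To see why resolution and CPU load are largely separate, here’s a toy model in Python (an illustrative sketch, not how any particular engine works). It assumes the CPU and GPU work on frames in parallel, so whichever is slower sets the frame time, and that GPU cost scales linearly with pixel count. The CPU and GPU timings are hypothetical numbers, not measurements.

```python
def frame_time_ms(cpu_ms: float, gpu_ms_at_1080p: float, pixels: int) -> float:
    """Estimate frame time as the slower of CPU and GPU work (toy model)."""
    base_pixels = 1920 * 1080
    # Assumption: GPU cost scales linearly with the number of pixels rendered.
    gpu_ms = gpu_ms_at_1080p * pixels / base_pixels
    # Assumption: CPU cost (logic, physics, draw calls) does not depend on resolution.
    return max(cpu_ms, gpu_ms)


def fps_from_ms(ms: float) -> float:
    return 1000.0 / ms


if __name__ == "__main__":
    CPU_MS = 12.0       # hypothetical: busy scene with lots of NPCs
    GPU_MS_1080P = 8.0  # hypothetical GPU cost at 1920x1080
    resolutions = {"720p": (1280, 720), "1080p": (1920, 1080), "4K": (3840, 2160)}
    for name, (w, h) in resolutions.items():
        t = frame_time_ms(CPU_MS, GPU_MS_1080P, w * h)
        print(f"{name:>5}: {t:5.1f} ms -> {fps_from_ms(t):3.0f} FPS")
```

With these made-up numbers, 720p and 1080p both land at 12 ms (about 83 FPS) because the CPU is the limit; only at 4K does the GPU become the bottleneck, at 32 ms (about 31 FPS).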

Pro-tip: Don’t just blindly crank everything to max. Experiment! Find the sweet spot between visual fidelity and performance. Use in-game benchmarks or external tools to monitor CPU usage and adjust settings accordingly. And remember, a good CPU overclock (if your cooler can handle it!) can make a difference.

Another pro-tip: Consider using a lower-overhead rendering API like Vulkan or DirectX 12 if your game supports them. These APIs reduce the driver overhead of each draw call and let the engine spread rendering work across multiple CPU cores, which can free up CPU resources. Results vary by game, so compare the options.

What influences the graphics in games?

A common answer is that your monitor’s resolution and refresh rate determine how a game looks. That’s partially correct but vastly oversimplified. Monitor resolution directly affects the GPU’s workload (a Full HD 1920×1080 frame contains 2,073,600 pixels, all of which must be rendered every frame), but it’s only one piece of a much larger puzzle affecting in-game visuals. Monitor refresh rate (e.g., 60Hz, 144Hz, 240Hz) determines how many frames per second (FPS) the display *can* show, not how many the GPU *generates*. A high refresh rate monitor won’t magically boost a low FPS, but it will show smoother motion when the GPU can deliver more frames than a lower-refresh monitor could display.
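
The pixel arithmetic is easy to check. This short Python sketch multiplies out pixels per frame and per second for a few common resolutions; it treats every pixel as equal work, which real games don’t (shading cost per pixel varies with settings and scene content).

```python
RESOLUTIONS = {
    "1920x1080 (Full HD)": (1920, 1080),
    "2560x1440 (QHD)": (2560, 1440),
    "3840x2160 (4K UHD)": (3840, 2160),
}


def pixels_per_frame(width: int, height: int) -> int:
    """Number of pixels the GPU must produce for one frame."""
    return width * height


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixel throughput needed to sustain a given frame rate."""
    return pixels_per_frame(width, height) * fps


for label, (w, h) in RESOLUTIONS.items():
    print(f"{label}: {pixels_per_frame(w, h):,} px/frame, "
          f"{pixels_per_second(w, h, 60):,} px/s at 60 FPS, "
          f"{pixels_per_second(w, h, 144):,} px/s at 144 FPS")
```

A 4K frame has exactly four times the pixels of a Full HD frame (8,294,400 vs 2,073,600), which is why resolution is usually the single biggest GPU-side setting.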

Beyond the monitor, countless other factors significantly influence game graphics. GPU power is paramount; a more powerful GPU can render more complex scenes at higher resolutions and frame rates. CPU performance also plays a crucial role, especially in highly CPU-bound games. Game settings, such as texture quality, shadow resolution, anti-aliasing, and level of detail (LOD), drastically affect visual fidelity and performance. Higher settings generally mean better visuals but lower FPS. Game engine optimization is critical; well-optimized games will run better than poorly optimized ones on the same hardware. Finally, GPU driver versions and game patches can significantly impact performance and stability. Ignoring these aspects leads to a misdiagnosis of performance bottlenecks. Focusing solely on resolution and refresh rate without understanding the interplay of these other elements will severely limit your ability to optimize your gaming experience.

Why do people with ADHD enjoy video games?

The appeal of video games for individuals with ADHD stems from their inherent design: constant shifts in focus, immediate gratification, and frequent rewards. Unlike many real-world tasks requiring sustained attention and delayed gratification, games offer a rapid-fire succession of stimuli and instant feedback loops. This aligns closely with the attention and reward-seeking patterns commonly associated with ADHD, making these experiences intensely engaging and rewarding in a way that often contrasts sharply with the monotony and delayed rewards experienced in other aspects of life.

This isn’t to say that all video games are equally engaging for someone with ADHD. Games with repetitive, slow-paced gameplay may still prove frustrating. The key is the dynamic nature of the experience. Quick decision-making, problem-solving, and constant adaptation are common features of action, strategy, and many role-playing games, and they can provide a stimulating and satisfying counterpoint to the challenges of managing ADHD symptoms in daily life. The sense of reward that comes with achieving in-game goals (often linked to dopamine release) further reinforces this positive feedback loop. This helps explain why games often feel more “alive” and engaging to those with ADHD than the slower-paced world outside the screen.

It’s important to note that while video games can provide a positive outlet, they aren’t a cure or replacement for proper diagnosis and treatment. Excessive gaming can still lead to negative consequences. The key lies in moderation and identifying gaming patterns that foster healthy engagement rather than becoming detrimental habits.

Understanding this dynamic allows educators and parents to utilize video games as a potential tool for enhancing engagement and fostering specific skills. For example, strategic games can help build planning and problem-solving abilities, while action games might improve reaction time and hand-eye coordination. However, responsible usage must be emphasized, considering potential downsides of excessive screen time.

Which graphics settings have the biggest impact on FPS?

Alright rookie, listen up. Want more FPS? Don’t waste time tweaking minor details. Start with the big guns: anti-aliasing, texture and object quality, draw distance, lighting (including shadows), and post-processing. These are the real FPS hogs, so lowering them first will usually give you the biggest performance boost.

Now, here’s the pro-tip: anti-aliasing is often a huge culprit. Try turning it down or switching from MSAA to a less demanding post-process method like FXAA. The visual difference is often smaller than you’d expect: FXAA smooths jagged edges reasonably well without killing your frame rate, though it can make the image slightly softer.

Texture quality is next. High-resolution textures look great, but they’re memory-intensive. Lowering this setting frees up VRAM, and it helps frame rate most when your current setting exceeds your GPU’s VRAM, which causes stuttering. Don’t go too low though; you want things to still look recognizable.

Draw distance controls how far you can see. Crank it down to improve performance, especially in open-world games. You’ll sacrifice some view distance, but you’ll gain frames that are worth the trade-off. Consider it a tactical decision.

Lighting and post-processing effects are often highly demanding. Reducing the quality here, particularly shadow detail and bloom, can noticeably improve performance. Think of it as cutting out the fancy filters.

Experiment with different settings. Don’t just blindly crank everything to low. Find the sweet spot where the game looks good enough and performs well. Remember, a smoother experience is more enjoyable than eye candy that drops your framerate.
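
One way to structure that experimenting is the “big guns first” approach: estimate how many milliseconds each setting costs, then lower the most expensive ones until you hit your frame-time budget. The Python sketch below is hypothetical. The setting names and costs are placeholders you would measure yourself (for example, by toggling one setting at a time in a benchmark), and it assumes the savings are independent and add up, which is only roughly true in real games.

```python
# Hypothetical savings (ms per frame) from lowering each setting one step.
# Placeholders only: measure your own by toggling one setting at a time.
COSTS_MS = {
    "anti_aliasing": 3.0,
    "draw_distance": 2.5,
    "lighting": 2.0,
    "post_processing": 1.5,
    "texture_quality": 0.5,
}


def lower_until_target(base_ms, costs, target_fps):
    """Greedily lower the most expensive settings until the frame fits the budget.

    Assumes each setting's saving is independent and additive (a simplification).
    Returns the settings lowered and the resulting estimated frame time in ms.
    """
    budget_ms = 1000.0 / target_fps
    frame_ms = base_ms + sum(costs.values())
    lowered = []
    for name, cost in sorted(costs.items(), key=lambda kv: kv[1], reverse=True):
        if frame_ms <= budget_ms:
            break
        frame_ms -= cost
        lowered.append(name)
    return lowered, frame_ms


lowered, frame_ms = lower_until_target(base_ms=8.0, costs=COSTS_MS, target_fps=75)
print(lowered, f"{frame_ms:.1f} ms (~{1000 / frame_ms:.0f} FPS)")
```

With these placeholder numbers the game starts at 17.5 ms (about 57 FPS); lowering anti-aliasing and draw distance brings it to 12 ms, inside the roughly 13.3 ms budget for 75 FPS, so the cheaper settings can stay high.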

Why do realistic games still look fake?

That uncanny valley feeling in realistic games? It’s because your brain is a super-powered detail detector, even if you’re not consciously aware of it. Subconsciously, you’re picking up on inconsistencies: lighting that’s just slightly off, textures that lack the subtle imperfections of real-world surfaces, animations that don’t quite capture the fluidity of natural movement. These discrepancies, even minute ones, trigger a disconnect. Think of it like this: the game nails the high-level representation—a human face, a flowing dress—but misses the micro-details that make it truly believable. These micro-details include things like subsurface scattering in skin (how light penetrates and reflects beneath the surface), accurate material responses to light (the way different materials reflect and refract light), and physically plausible simulations of hair and cloth physics. The more these details are improved, the closer the game gets to bridging that valley, even if perfect realism is ultimately impossible.
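
To give a flavor of what “accurate material responses to light” means in code, here’s Schlick’s approximation of Fresnel reflectance, a formula widely used in physically based rendering. It captures the fact that surfaces reflect more light at grazing angles (the reason a lake mirrors the sky near the horizon but looks transparent straight down). This is a Python sketch for illustration, not any particular engine’s shader; real implementations run on the GPU and combine this term with many others.

```python
import math


def schlick_fresnel(f0: float, cos_theta: float) -> float:
    """Schlick's approximation of Fresnel reflectance.

    f0: reflectance at normal incidence (about 0.04 is a common value for
        non-metallic surfaces such as plastic or stone).
    cos_theta: cosine of the angle between the view direction and surface normal.
    """
    cos_theta = min(max(cos_theta, 0.0), 1.0)  # clamp to the valid range
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


for degrees in (0, 45, 75, 89):
    r = schlick_fresnel(0.04, math.cos(math.radians(degrees)))
    print(f"{degrees:>2} deg from normal: reflectance {r:.3f}")
```

Reflectance stays near 0.04 when the surface is viewed head-on and climbs steeply toward 1.0 as the viewing angle approaches grazing. Missing or inaccurate effects like this are exactly the kind of micro-detail the brain picks up on.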

Game developers are constantly pushing boundaries, striving for better global illumination techniques, more sophisticated shader technology, and advanced physics engines. Despite these advancements, the human brain remains remarkably adept at identifying even the slightest deviation from what it expects to see, making the pursuit of true realism a continuously evolving challenge. The “uncanny valley” is a testament to the complexity of human perception and the intricate detail required to truly fool the eye.

What game is beneficial for ADHD?

For individuals with ADHD, games incorporating visual timers can be especially helpful. These provide a tangible “light at the end of the tunnel,” mitigating boredom and offering a clear sense of progression. The satisfaction of beating the clock becomes a powerful motivator, fostering focus and task completion. Many ADHD-friendly games cleverly integrate reward systems, allowing players to earn points redeemable for in-game prizes or even tangible rewards in the real world. This gamified reward system taps into the brain’s reward pathways, boosting engagement and encouraging sustained attention. The key is to find games with appropriately paced challenges: neither so easy that they become boring nor so difficult that they become overwhelming. Consider games emphasizing strategy and planning, rather than solely relying on fast reflexes, as these require sustained mental engagement.

Furthermore, the social aspect of gaming should not be overlooked. Cooperative games, especially those requiring teamwork and communication, can help individuals with ADHD develop crucial social skills and learn to collaborate effectively. The structured nature of many games can also act as a helpful framework, providing a sense of order and predictability that can be soothing and beneficial for managing ADHD symptoms. Remember to choose games that cater to the individual’s specific interests and preferences, ensuring optimal engagement and enjoyment. The best games will not only be fun but will also subtly incorporate elements that cultivate focus, planning, and problem-solving skills.

Beyond simple timers, consider games with clear rules and readily available feedback. This allows for immediate understanding of progress and success, preventing frustration and maintaining motivation. Games that break down complex tasks into smaller, manageable steps are also ideal. Finally, avoid games that rely heavily on impulsive reactions or those with overwhelming stimuli. A well-chosen game can be a valuable tool in managing ADHD, offering a fun and engaging way to improve focus and executive function.

What is the most realistic game in the world?

There’s no single “most realistic” game; realism is subjective and depends on what aspects you prioritize. However, certain titles excel in specific areas.

Graphics and Simulation:

- Microsoft Flight Simulator: Unmatched in its detailed rendering of the planet and flight physics. Excellent for those seeking realistic flight simulation, but gameplay can be quite niche.

- Gran Turismo series: Longstanding king of racing simulation, renowned for its accurate car handling and physics. Focus is on driving, not necessarily overall environmental realism.

- Elite Dangerous: Massive scale space exploration with incredible visual fidelity and complex simulation of space travel. Steep learning curve.

- Farming Simulator series: Surprisingly realistic in its depiction of agricultural tasks and machinery. Niche but incredibly detailed in its own right.

- Arma 3: Military simulation with exceptionally realistic ballistics, weapon handling, and AI. High skill ceiling and often unforgiving.

Narrative and Character Realism:

- The Last of Us Part II: While graphically impressive, its strength lies in incredibly nuanced character development and a gripping, emotionally resonant narrative. The realism is in its storytelling, not necessarily its physics engine.

- Cyberpunk 2077: Launch issues aside, it boasts a richly detailed and believable open world, albeit with a stylized aesthetic. Character interactions and story are key elements of its realism, although their execution is often controversial.

Key Considerations: Defining “realism” requires specifying what aspects you value. Do you want photorealistic graphics? Accurate physics simulation? Believable characters and stories? Different games excel in different areas.

Pro Tip: Remember, “realism” in a game often clashes with the need for engaging gameplay. The most realistic simulation might be incredibly boring. The best games often strike a balance between realism and fun.

What’s better, FPS or graphics?

Forget the “graphics vs. FPS” debate; it’s a false dichotomy. High FPS is crucial for competitive play. Anything under 60 FPS is unacceptable: each frame stays on screen for more than 16.7 ms, so you’re reacting to stale information and handing opponents an advantage. A smooth, consistent 144Hz or higher is the realm of true masters. Graphics? They matter, sure, but only to the extent they don’t cripple your performance. High settings that tank your FPS are a handicap. Prioritize frame rate above all else in PvP; reaction time wins fights, not pretty shaders. Certain genres, like fast-paced shooters, demand significantly higher FPS than, say, an RPG. The difference between 60 FPS and 144 FPS in a competitive arena is like fighting with one hand tied behind your back. In PvP, frame rate is king; learn to optimize your settings for performance over visual fidelity.

How many FPS are needed for comfortable gameplay?

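Alright gamers, let’s talk FPS. Average FPS is the headline number for a smooth gaming experience, but don’t ignore the minimums: the “1% low” (the frame rate during the slowest 1% of frames) tells you how bad the stutters get. A game that averages 90 FPS but keeps dipping to 20 will feel worse than a steady 60.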
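
Average FPS is computed from frame times, and the same data can also produce the “1% low”. This Python sketch computes both. It uses one common convention for 1% lows (the average of the slowest 1% of frames, converted to FPS), but benchmarking tools define it slightly differently, so treat the exact method as an assumption. The capture data is made up.

```python
def fps_stats(frame_times_ms):
    """Return (average FPS, 1% low FPS) from per-frame times in milliseconds.

    Average FPS is total frames divided by total time, which is not the same
    as averaging per-frame FPS values. The 1% low here is the FPS equivalent
    of the mean of the slowest 1% of frames (one convention among several).
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    slowest = sorted(frame_times_ms, reverse=True)
    k = max(1, n // 100)  # size of the slowest-1% slice
    low_fps = 1000.0 / (sum(slowest[:k]) / k)
    return avg_fps, low_fps


# Hypothetical capture: 990 smooth frames at 10 ms plus 10 hitches at 50 ms.
frames = [10.0] * 990 + [50.0] * 10
avg, low = fps_stats(frames)
print(f"average: {avg:.1f} FPS, 1% low: {low:.1f} FPS")
```

Here the average is about 96 FPS, which looks great on paper, but the 1% low is 20 FPS: the ten 50 ms hitches are exactly the stutters you’d feel.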

The sweet spot for most games? Aim for at least 60 FPS. 30 FPS is playable, sure, but it’s noticeably choppy. 60 FPS is a massive improvement, making the game feel much more responsive and fluid. You’ll notice less input lag, smoother animations, and overall better gameplay.

Here’s the breakdown:

- 30 FPS: Playable, and still common in console “quality” modes, but motion looks choppy and input lag is noticeable. Acceptable for older titles or less demanding games.

- 60 FPS: The sweet spot for most gamers. Smooth and responsive gameplay.

- 120 FPS (and above): Competitive gaming territory. A significant upgrade from 60 FPS, with extremely smooth gameplay and lower input lag. This is ideal for fast-paced games where precise aiming and reaction time are crucial. Fast motion also looks clearer, with less smearing during quick camera turns.
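
Frame rate is easier to reason about as frame time, the number of milliseconds each frame stays on screen. A tiny Python helper converts between them:

```python
def ms_per_frame(fps: float) -> float:
    """Time budget per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


for rate in (30, 60, 120, 144, 240):
    print(f"{rate:>3} FPS -> {ms_per_frame(rate):5.2f} ms per frame")
```

Going from 30 to 60 FPS cuts each frame from about 33.3 ms to 16.7 ms, and 120 FPS brings it to about 8.3 ms. Keep in mind that frame time is only one contributor to total input lag; the game engine, drivers, and display add their own delay.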

Keep in mind: Different games have different requirements. Fast-paced shooters benefit more from high frame rates than slow strategy or puzzle games, and a visually demanding AAA title will be harder to run at any given frame rate than a lightweight indie game. Also, your personal preference plays a role. Some people are perfectly content at 60 FPS, while others crave the smoothness of higher frame rates.

Ultimately, experiment and find what feels right for you. But if you want a consistently smooth and enjoyable gaming experience, aiming for at least 60 FPS is a great goal.

What’s causing the FPS drops in games?

FPS drops? Let’s be real, it’s usually a clusterf* of things, not just one setting. High graphics settings? Yeah, that’s obvious. More polygons, better textures, fancy shaders – it all screams for more GPU power. But it’s not just about maxing out everything.

Here’s the lowdown, from someone who’s seen it all:

- Shadows: Those fancy ray-traced shadows? Beautiful, but a GPU killer. Cascaded shadow maps are better for performance but can look blocky.

- Lighting: Global Illumination? Gorgeous, but performance intensive. Screen Space Reflections? Resource hog. Know your enemy.

- Textures: High-resolution textures are glorious, but they eat VRAM like candy. Check your texture settings – you might be surprised at the impact.

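- Anti-aliasing (AA): Smooths edges, but it’s computationally expensive. TAA (Temporal AA) often strikes a decent balance between quality and performance, while MSAA costs considerably more, especially at high sample counts. Supersampling (SSAA) is the true brute-force approach: it renders the whole image at a higher resolution.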

- Draw distance: Seeing farther means rendering more stuff. Tweaking this can have a significant impact.

- Post-processing effects: Depth of field, bloom, motion blur – they all add visual flair but are performance-hungry. Experiment with disabling them.

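- CPU Bottleneck: Your GPU might be sitting partly idle because the CPU can’t prepare frames fast enough. Check both CPU and GPU usage during gameplay. If GPU usage stays well below its maximum while CPU usage is pegged at 100% (or a few individual cores are maxed out), the CPU is the weak link, and upgrading it might be necessary even if your GPU is top-of-the-line.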

- Driver Issues: Outdated or buggy drivers can tank your FPS. Keep your drivers updated – and roll back to older versions if a new driver causes problems.

- Background Processes: Close unnecessary applications and browser tabs. Those resource hogs will sneak up on you.

- Overclocking (Careful!): A little overclocking can provide a performance boost, but be cautious and monitor your temperatures. A fried component is a bad time.
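
The CPU-bottleneck check can be written as a rough rule of thumb. The hypothetical Python function below reads per-core CPU usage and GPU utilization (numbers you’d copy from a monitoring tool such as MSI Afterburner) and guesses the limiting component. The thresholds are assumptions, not vendor guidance.

```python
from typing import Sequence


def likely_bottleneck(per_core_cpu: Sequence[float], gpu_util: float,
                      core_busy: float = 90.0, gpu_busy: float = 95.0) -> str:
    """Guess the limiting component from monitoring readings (rule of thumb).

    per_core_cpu: usage of each CPU core in percent.
    gpu_util: GPU utilization in percent.
    The default thresholds are assumptions, not vendor guidance.
    """
    if gpu_util >= gpu_busy:
        return "GPU-bound: lower resolution or GPU-heavy settings"
    if max(per_core_cpu) >= core_busy:
        return "CPU-bound: at least one core is saturated while the GPU waits"
    return "neither saturated: check frame caps, V-Sync, drivers, and background tasks"


readings = [98, 35, 30, 28, 40, 25, 22, 30]  # hypothetical per-core usage in %
print(f"average CPU: {sum(readings) / len(readings):.1f}%")
print(likely_bottleneck(readings, gpu_util=60))
```

For the sample readings, total CPU usage averages only 38.5%, yet the function reports a CPU bottleneck because one core is at 98% while the GPU idles at 60%. That’s the classic case where an overall CPU percentage hides the problem.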

Don’t just blindly max everything. Experiment! Find the sweet spot between visual fidelity and playable framerate. Use in-game benchmarks or external tools to see what affects performance most in *your* setup.

Why is my CPU at 70% utilization?

70% CPU usage is a significant load, potentially impacting gameplay performance. This isn’t necessarily a critical error, but it warrants investigation. High CPU usage often translates to lag, stuttering, and inconsistent frame rates – all detrimental in competitive gaming.

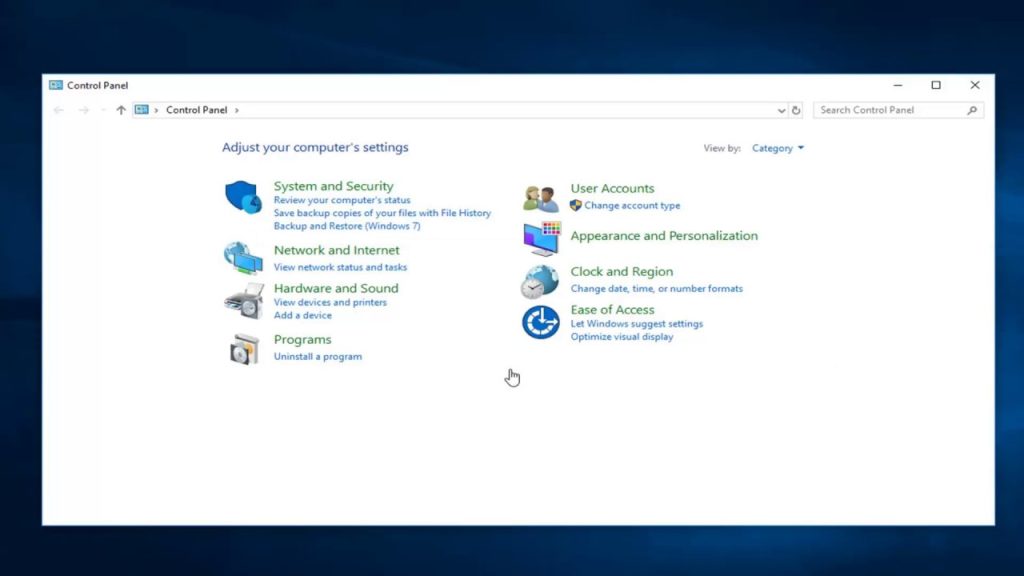

Identifying the Culprit: The first step is using Task Manager (or a similar resource monitor). The “Processes” or “Details” tab reveals which applications are consuming the most CPU resources. Look for anything unexpected; a game should be the primary user, with background processes consuming minimal resources.

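- Resource-Intensive Games: Some games are simply more demanding than others. If the high usage comes solely from your game, lower CPU-heavy settings such as draw distance, crowd/NPC density, and shadow quality, or cap your frame rate. Resolution and texture quality mostly load the GPU, so lowering them won’t do much for CPU usage.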

- Background Processes: Streaming software (OBS, XSplit), overlay apps (Discord, MSI Afterburner), anti-virus software, and even Windows updates can consume substantial CPU power. Close unnecessary applications.

- Malware/Viruses: A less common, yet critical, cause is malware. A thorough scan with your anti-virus is crucial.

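- Overclocking Issues: If you’ve overclocked your CPU, an unstable or overly aggressive overclock can cause crashes, and if the chip hits its thermal or power limits it drops its clock speed. At a lower clock, the same workload shows up as higher utilization. Check your overclock settings.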

- Driver Issues: Outdated or corrupted graphics drivers are a frequent culprit. Update your graphics card drivers (and chipset drivers) to the latest versions from the manufacturer’s website (Nvidia, AMD, Intel).

Advanced Troubleshooting:

- Monitor CPU Temperature: Excessive CPU temperature causes thermal throttling: the CPU lowers its clock speed, so the same work takes up a larger share of its capacity and usage rises. Use monitoring software (like HWMonitor or MSI Afterburner) to check your CPU temperature under load.

- Check for Bottlenecks: A CPU bottleneck occurs when the CPU cannot keep up with the other components, typically when the GPU sits waiting for the CPU to prepare frames. If this is a recurring issue across multiple games, consider upgrading your CPU (and, if needed, your RAM).

- Windows Updates: Ensure your operating system is up-to-date. New updates often include performance improvements and bug fixes.
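
Why would throttling or a struggling overclock push usage *up*? A simplified Python model makes the arithmetic concrete. It assumes a workload needs a fixed number of cycles per second on one core and that utilization is simply required cycles over available cycles; real CPUs complicate this with boost behavior, IPC, and memory stalls. The workload figure is hypothetical.

```python
def utilization_pct(required_ghz: float, clock_ghz: float) -> float:
    """Share of one core's cycles a fixed workload needs, in percent.

    Simplified model (assumption): ignores boost behavior, IPC, and memory stalls.
    Results above 100% mean the work no longer fits and the game slows down.
    """
    return 100.0 * required_ghz / clock_ghz


REQUIRED_GHZ = 2.8  # hypothetical: cycles per second the game needs on its busiest core
for label, clock in (("normal", 4.0), ("throttled", 3.2)):
    print(f"{label:>9} at {clock} GHz: {utilization_pct(REQUIRED_GHZ, clock):.1f}%")
```

The same work that used 70% of the core at 4.0 GHz takes 87.5% once the clock drops to 3.2 GHz.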

Pro Tip: Regularly cleaning your system of unnecessary files and programs can improve overall performance. Defragmenting your hard drive (if applicable) can also make a difference. For SSDs, focus on maintaining adequate free space.

Why do people with ADHD achieve success?

ADHD in esports is a double-edged sword. While often perceived as a hindrance, certain ADHD traits can be surprisingly advantageous, leading to success in competitive gaming.

Hyperfocus: This ability to intensely concentrate on a single task for extended periods, often ignoring distractions, is crucial in high-stakes esports matches. When channeled correctly, hyperfocus can enable players to achieve peak performance during crucial moments.

Creativity and Innovation: The impulsive, out-of-the-box thinking associated with ADHD can lead to unique strategies and approaches. This unconventional thinking can disrupt opponents and create surprising advantages, especially in strategy-based games.

Resilience and Adaptability: The challenges faced by individuals with ADHD often foster resilience and the ability to bounce back from setbacks. Esports is a highly competitive environment with frequent losses. The ability to learn from mistakes and adapt quickly is invaluable.

Fast Reaction Time and Reflexes: This isn’t a direct effect of ADHD, and the evidence is mixed: research more commonly links ADHD to more variable reaction times. Some individuals with ADHD do have fast reflexes, though, and in fast-paced games this can translate to a significant competitive edge.

However, it’s crucial to understand the downsides:

- Impulsivity: This can lead to rash decisions and mistakes in game.

- Difficulty with sustained attention: Managing energy levels and maintaining focus during long tournaments is a significant challenge.

- Emotional regulation: Frustration and anger management are vital in high-pressure situations. ADHD can exacerbate these challenges.

Success with ADHD in esports hinges on effective management strategies:

- Medication: For some, medication can significantly improve focus and impulse control.

- Therapy: Cognitive Behavioral Therapy (CBT) can help develop coping mechanisms for managing impulsivity and emotional regulation.

- Routine and Structure: Creating a structured training regimen and maintaining a consistent sleep schedule are crucial for managing energy levels.

- Mindfulness and Stress Management Techniques: Techniques like meditation can aid in improving focus and reducing stress.

Ultimately, success in esports, regardless of ADHD, requires dedication, hard work, and strategic thinking. ADHD presents both challenges and opportunities; effective management is key to leveraging the advantages and mitigating the disadvantages.

What’s better: higher FPS or better graphics?

The “better graphics or higher FPS” debate is a nuanced one, often misunderstood by newcomers. While higher-resolution textures and advanced shaders undoubtedly enhance visual fidelity, prioritizing FPS is crucial for a superior gaming experience, especially in competitive scenarios. High FPS, typically exceeding 60 frames per second, translates directly to smoother gameplay, reducing motion blur and making rapid movements feel more responsive and accurate. This isn’t just about aesthetics; it’s about the tangible impact on gameplay. Lower FPS introduces noticeable input lag, the delay between your action and its on-screen reflection. This lag can be detrimental in fast-paced games, costing you crucial reaction time. Think of it this way: stunning graphics are useless if your character teleports instead of smoothly reacting to your commands. The ideal situation is a balance, of course, but at a certain point, sacrificing some graphical detail for a significant FPS boost offers a much more noticeable and beneficial improvement to overall gameplay.

Consider the impact on different genres: In a fast-paced shooter, a smooth 144Hz experience is far more advantageous than slightly enhanced shadows at 30 FPS. Strategy games might benefit more from higher resolution textures and detailed unit models, as response times aren’t as critical. Understanding the importance of FPS in relation to the demands of each game is key to optimizing your settings.

Finally, remember that the human eye has limits. While ultra-high resolution textures offer minute visual improvements beyond a certain point, the smoothness provided by higher FPS is consistently noticeable and directly affects performance. The perceptual difference between 60 FPS and 144 FPS is far more significant than the one between a slightly blurry and a razor-sharp texture. Therefore, aiming for a sufficiently high FPS should be your primary focus unless your game’s specific mechanics rely heavily on visual detail.

How many FPS can the human eye see?

The “how many FPS can the eye see?” question is a bit of a trick. The eye doesn’t see in discrete frames, so there’s no single cutoff; under ideal lab conditions, some flicker and motion artifacts can be detected at rates in the hundreds of hertz, by some estimates approaching 1000Hz (1kHz). Think of it like this: your monitor’s refresh rate is only part of the equation; the game’s actual rendering speed is another.

Practically speaking, many gamers notice little difference above 100-150 FPS. Perceived smoothness largely levels off around there, and beyond that, diminishing returns kick in. That’s why pushing for hundreds or thousands of FPS is often overkill unless you’re playing super-competitive esports, where a few milliseconds matter.

But here’s the kicker: that 100-150 FPS sweet spot isn’t universal. Factors like your monitor’s refresh rate (60Hz, 144Hz, 240Hz, etc.), your individual visual acuity, and even the type of motion being displayed influence your perception. A higher refresh rate is what lets you actually see the benefit of higher frame rates. Fast-moving action needs more frames than slow, deliberate scenes to look smooth.

So, while your eye *can* potentially process 1000 FPS, the practical limit for smooth gaming is way lower, typically around that 100-150 range. Don’t get caught up chasing ludicrously high frame rates unless you have a specific reason, and make sure your equipment can actually sustain it.
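
The diminishing returns are easy to see in frame-time terms. This Python sketch computes how many milliseconds per frame each doubling of frame rate actually saves:

```python
def ms_saved(from_fps: float, to_fps: float) -> float:
    """Milliseconds of frame time saved by going from one frame rate to another."""
    return 1000.0 / from_fps - 1000.0 / to_fps


fps = 30
while fps < 960:
    print(f"{fps:>3} -> {fps * 2:<3} FPS saves {ms_saved(fps, fps * 2):5.2f} ms per frame")
    fps *= 2
```

Each doubling saves half as much as the one before: about 16.7 ms from 30 to 60 FPS, 8.3 ms from 60 to 120, 4.2 ms from 120 to 240, and only about 2.1 ms from 240 to 480.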

Which game looks the most realistic?

Let’s be real, the “realistic” argument is always subjective, but The Last of Us Part II pushed the boundaries of what’s possible. The facial animations? Forget about it. They’re not just good, they’re unsettlingly lifelike. You can practically *feel* the characters’ internal struggles just by looking at their micro-expressions. That level of detail is usually reserved for high-budget movies, not games.

Here’s the thing though: it’s not just the faces. The whole package matters. The lighting, the environmental detail, the way clothes react – it all adds to the immersion. They clearly invested heavily in motion capture, and it shows. The movement is fluid and believable, even in the most intense action sequences.

But here’s the kicker: while technically impressive, realism isn’t everything. Some might argue that hyper-realism can sometimes detract from the gameplay experience, especially if it’s at the expense of mechanics or level design. It’s a fine line, but TLOU2 mostly managed to strike a balance.

What really sets it apart is:

- Performance Capture: Not just facial expressions, but full-body performance capture drastically enhances believability.

- Photogrammetry: They likely used this heavily to create hyper-realistic environments and character models, adding to the overall fidelity.

- High-end Rendering: The lighting, shadows, and overall visual effects create a sense of depth and realism unseen in many other games.

Ultimately, The Last of Us Part II represents a significant leap forward in graphical fidelity and cinematic storytelling in gaming. While other games might boast impressive visuals in specific areas, the sheer level of consistency across all aspects of the game’s presentation is what places it at the top for me in terms of realism. The level of detail is just insane.

Is 60 frames per second good?

60 FPS is totally solid, but it’s the baseline for competitive gaming. A higher FPS means shorter frame times, which results in smoother gameplay and can give you a real competitive edge: you see changes sooner, precise aim gets easier, and you’ll have less input lag.

Why is this important?

- Reduced Input Lag: The lower the frame time, the less delay between your actions and what’s displayed on screen. This is crucial in fast-paced games.

- Improved Responsiveness: Higher FPS allows for quicker reactions, giving you a crucial advantage in close-quarters combat or intense moments.

- Smoother Motion: The difference between 60 FPS and, say, 144 FPS or even higher, is noticeable, especially in fast-moving scenes. Smoother visuals mean less motion blur and clearer target tracking.

Going beyond 60 FPS:

- 144Hz monitors are becoming increasingly common, offering significantly improved smoothness compared to 60Hz. Aiming for 144 FPS or higher on a 144Hz monitor is ideal for a competitive edge.

- Higher refresh rate monitors (240Hz, 360Hz) offer even greater smoothness, but require a powerful PC to consistently maintain high FPS. The difference becomes less noticeable for most players beyond 144 FPS though.

- Remember that your monitor’s refresh rate also matters. If your monitor only refreshes at 60Hz, pushing for more than 60 FPS won’t make motion look any smoother, although it can slightly reduce input lag (at the cost of possible screen tearing).

Is 80% CPU usage normal?

80% CPU usage? It depends on what you’re doing. During gaming, rendering, or compiling, that kind of load can be perfectly normal. If it’s sustained while you’re doing light work or the system is idle, though, it’s a red flag, not a death sentence, but definitely a situation demanding investigation. Performance dips, lag, freezing: these are the symptoms.

Why is high CPU usage bad?

- Reduced Responsiveness: Your system will feel sluggish, applications will load slowly, and multitasking becomes a nightmare.

- Increased Heat and Noise: High sustained loads generate more heat and make your fans work harder. Modern CPUs are designed to run under sustained load within their rated temperatures, but with inadequate cooling they throttle to protect themselves, costing performance.

- System Instability: High CPU usage on its own shouldn’t crash a healthy system, but when it comes with overheating, an unstable overclock, or a misbehaving program, you can see crashes, freezes, and even data loss.

Troubleshooting High CPU Usage:

- Identify the Culprit: Use your Task Manager (Windows) or Activity Monitor (macOS) to pinpoint the processes consuming the most resources. Look for unexpectedly high usage from applications you’re not actively using. Sometimes, a rogue program silently hogs resources.

- Close Unnecessary Programs: Close background apps, browser tabs, and anything you’re not actively using. This is a quick win, often resolving minor instances of high usage.

- Check for Malware: Malicious software is a notorious CPU hog. Run a full system scan with your antivirus software.

- Update Drivers: Outdated drivers can lead to inefficient resource management. Check for updates to your graphics drivers and other critical components.

- Consider Hardware Upgrades: If you’ve ruled out software issues, upgrading your CPU, RAM, or even your storage can drastically improve performance. A higher clock speed or more cores can dramatically improve multitasking.

- Reinstall Operating System (Last Resort): If all else fails, a clean reinstall of your OS can resolve deep-seated issues. This is a significant undertaking, so only proceed if you’ve exhausted all other options and backed up your important data.

Remember: Occasional spikes in CPU usage are normal, but consistent high usage (80% or above for prolonged periods) requires attention. Addressing the root cause will keep your system running smoothly and prevent potential damage.
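
The difference between spikes and sustained load can be checked mechanically if you log CPU usage at a fixed interval (for example, once per second; the third-party psutil library’s `psutil.cpu_percent(interval=1)` is one way to take samples). The Python sketch below flags only runs of consecutive high readings. The 80% threshold comes from the text above, while the 30-sample window is an arbitrary assumption you’d tune.

```python
def sustained_high(samples, threshold=80.0, window=30):
    """Return True if `window` consecutive samples are all at or above `threshold`.

    samples: CPU usage readings in percent, assumed to be taken at a fixed
    interval (e.g. once per second). Any reading below the threshold resets
    the run, so isolated spikes are ignored.
    """
    run = 0
    for usage in samples:
        run = run + 1 if usage >= threshold else 0
        if run >= window:
            return True
    return False


spiky = [20.0] * 50 + [95.0] * 5 + [20.0] * 50  # short burst, e.g. an app launching
sustained = [20.0] * 10 + [85.0] * 40           # 40 samples pinned above 80%
print(sustained_high(spiky), sustained_high(sustained))  # False True
```

A few seconds at 95% don’t trip the check, but 40 straight samples at 85% do.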