The statement that only monitor resolution and refresh rate affect in-game FPS is a massive oversimplification. Resolution (the pixel count, e.g. 1920×1080 = 2,073,600 pixels per frame) directly impacts the processing load on your GPU, but refresh rate (Hz) only dictates how many frames the monitor can display per second, not how many the game generates. A high refresh rate monitor won’t magically boost a low FPS game.
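To put numbers on that, here is a minimal Python sketch. The function names `pixel_count` and `displayed_fps` are made up for illustration, and the "monitor shows the lower of the two numbers" rule is a simplification that ignores tearing and sync modes.

```python
# Pixel counts for common resolutions, plus a simple model of how many
# distinct frames per second a monitor can actually show.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(width: int, height: int) -> int:
    """Number of pixels the GPU has to produce for one frame."""
    return width * height

def displayed_fps(rendered_fps: float, refresh_hz: float) -> float:
    """Simplified model: the monitor cannot show more frames than its
    refresh rate, and it cannot invent frames the GPU never rendered."""
    return min(rendered_fps, refresh_hz)

for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(w, h):,} pixels per frame")

# A 144 Hz panel does not speed up a game that renders 45 FPS.
print(displayed_fps(45, 144))
# A 60 Hz panel caps what you see even if the game renders 200 FPS.
print(displayed_fps(200, 60))
```

Note that 4K has exactly four times the pixels of 1080p, which is why the resolution setting hits the GPU so hard.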

Many other factors significantly influence in-game graphics and FPS. These include:

GPU Power: The graphics processing unit is the core component determining rendering capabilities. A more powerful GPU handles higher resolutions, more complex textures, and advanced visual effects with greater ease, resulting in higher FPS and better visual fidelity.

CPU Performance: While often overlooked, the CPU plays a crucial role, especially in CPU-bound games. A weak CPU can bottleneck the GPU, limiting its potential and resulting in lower FPS.

Game Engine and Optimization: The game engine itself dictates performance. Well-optimized games run smoother than poorly optimized ones, even on identical hardware.

Graphics Settings: In-game settings like texture quality, shadow detail, anti-aliasing, and ambient occlusion have a massive impact on performance. Lowering these settings reduces the processing load, leading to higher FPS.

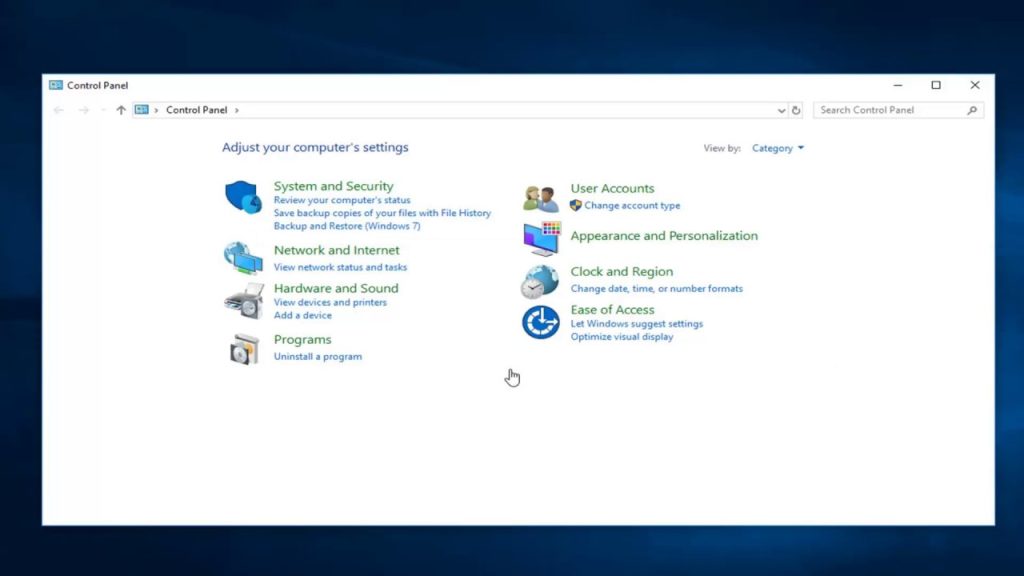

Drivers: Outdated or improperly installed graphics drivers can significantly impact performance. Always ensure you have the latest drivers installed.

RAM: Sufficient RAM is crucial for smooth gameplay. Insufficient RAM can lead to stuttering and performance issues.

Storage: Using an SSD instead of a traditional HDD mainly reduces loading times. It doesn’t raise your average FPS, although games that stream assets during play can hitch on a slow drive.

Therefore, achieving optimal in-game graphics and FPS requires a holistic approach, considering all these interconnected components, not just monitor specs.
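The CPU bottleneck idea from the list above can be sketched with a toy model. This assumes the CPU and GPU work on frames in parallel, so the slower one sets the pace; real games are messier, and `estimated_fps` and `bottleneck` are hypothetical helper names.

```python
def estimated_fps(cpu_ms: float, gpu_ms: float) -> float:
    """Toy model: the slower of the two per-frame costs sets the frame rate."""
    return 1000.0 / max(cpu_ms, gpu_ms)

def bottleneck(cpu_ms: float, gpu_ms: float) -> str:
    """Name the component that limits the frame rate in this model."""
    if cpu_ms > gpu_ms:
        return "CPU"
    if gpu_ms > cpu_ms:
        return "GPU"
    return "balanced"

# CPU needs 10 ms per frame, GPU needs 6 ms: the CPU holds things at 100 FPS.
print(estimated_fps(10, 6), bottleneck(10, 6))

# Lowering graphics settings (GPU now 4 ms) changes nothing while CPU-bound.
print(estimated_fps(10, 4), bottleneck(10, 4))

# With a faster CPU (5 ms) the GPU becomes the limit again.
print(estimated_fps(5, 8), bottleneck(5, 8))
```

In this model, dropping graphics settings only helps when the GPU is the slower side, which matches the point that all the components have to be considered together.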

Does the depth of field look better?

Depth of field is like a cheat code in photography. It’s not just about making the background blurry; it’s about strategic control. Think of it as manipulating the player’s focus in your visual game. Mastering it is crucial; it’s the difference between a decent shot and a truly captivating one, the difference between a level cleared and a boss defeated.

A shallow depth of field – that super-blurry background – is your power-up for isolating subjects, making them pop, creating a cinematic feel. It’s perfect for portraits, emphasizing the model, while the background fades into a pleasing wash of color. This is your go-to strategy for many situations.

Conversely, a deep depth of field – everything in sharp focus – is your strategic defense. Think landscapes, where you need the viewer to appreciate every detail from the foreground to the distant mountains. This is your “area-of-effect” spell, ensuring nothing crucial goes unnoticed.

Don’t just randomly apply it, though. Experiment and understand the rules before you break them. Aperture, focal length, and distance to the subject are your key stats – learn how to level them up to achieve your desired depth of field. Treat every photo as a different challenge; choose the right depth of field strategy to overcome it.

Practice is key. Think of it as grinding – the more you shoot, the better you’ll become at instinctively choosing the right depth of field to achieve your desired visual impact. You’ll develop an eye for knowing when a shallow DOF will dramatically enhance your composition, and when a deep DOF adds storytelling power. Eventually you’ll be able to master every boss battle.

Is depth of field necessary in games?

Depth of field in games? Dude, it’s all about simulating real-world vision. Think about it – your eye doesn’t focus on everything at once, right? DOF mimics that, blurring the background and foreground to emphasize what’s important. It’s a crucial tool for directing player focus, guiding them through the environment and highlighting key objects or characters. It’s not just a pretty effect; it’s a powerful storytelling and gameplay mechanic.

Now, there’s a lot of nuance here. You can have shallow DOF, where only a tiny area is in sharp focus – great for cinematic moments and emphasizing a single subject. Or you can have a deeper DOF, keeping more of the scene in focus. The choice depends on the game’s style and what the developers want to communicate. A fast-paced shooter might use shallow DOF sparingly, focusing on immediate threats, while a narrative-driven adventure might use it more liberally to create atmosphere and direct your attention to subtle details.

And get this – DOF isn’t just about realism. It can also be used stylistically. Think about how some games use a constantly blurry background to emphasize the speed and chaos of the action. Or how others employ it to create a dreamy or surreal atmosphere. It’s a flexible tool that skilled developers can use in many creative ways.

Finally, performance is a big consideration. DOF effects, especially high-quality ones, can be computationally expensive. That’s why you see different implementations, from simple blur passes to more sophisticated bokeh-style blurs that imitate the shape of a camera aperture. The quality level directly impacts the game’s performance, so developers need to strike a balance between visual fidelity and smooth gameplay.
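For a rough feel of why blur quality costs performance, here is a back-of-the-envelope sketch. It assumes a naive implementation: a simple Gaussian-style blur done as two 1D passes versus a disc-shaped (bokeh-like) kernel sampled in full 2D. Real engines use many tricks to cut this down, so treat the numbers as an upper-bound illustration, not a measurement.

```python
def samples_separable(radius: int) -> int:
    """Texture samples per pixel for a blur done as two 1D passes."""
    return 2 * (2 * radius + 1)

def samples_full_2d(radius: int) -> int:
    """Texture samples per pixel for a naive full 2D kernel."""
    return (2 * radius + 1) ** 2

PIXELS_1080P = 1920 * 1080

for radius in (2, 4, 8):
    cheap = samples_separable(radius)
    costly = samples_full_2d(radius)
    print(
        f"radius {radius}: {cheap} vs {costly} samples per pixel, "
        f"{cheap * PIXELS_1080P:,} vs {costly * PIXELS_1080P:,} per 1080p frame"
    )
```

The 2D cost grows with the square of the blur radius, so a wider, prettier blur gets expensive quickly.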

What’s better, FPS or graphics?

Bro, FPS over graphics any day. Higher FPS means smoother gameplay, pure and simple. It’s not just about pretty pictures; it’s about reaction time. That extra smoothness gives you a competitive edge, letting you react faster to enemy movements and make more precise shots.

Think of it like this:

- Lower FPS (e.g., 30fps): Choppy movement, delayed reactions, harder to track targets accurately. You’re fighting the game’s limitations as much as your opponents.

- Higher FPS (e.g., 144+fps): Fluid, responsive gameplay. You see everything crisply, enabling better aim, faster decision-making, and ultimately, more wins. This is the difference between seeing a flashbang and *reacting* to a flashbang.

Graphics are important, sure, but they’re secondary to performance. You can always dial down some settings to boost your FPS without sacrificing too much visual fidelity. Prioritizing consistent, high FPS is crucial for any competitive player.

Here’s the breakdown:

- Competitive Edge: Higher FPS translates directly to better performance and reaction time.

- Reduced Input Lag: Less delay between your actions and what’s displayed on screen.

- Smoother Aim: Tracking targets becomes significantly easier.

- Better Awareness: You’ll notice subtle enemy movements much faster.

Maximize your FPS, then optimize your graphics. That’s the winning formula.

What’s better, FPS or graphics?

The age-old debate: FPS vs. Graphics. It’s not a simple either/or situation, fellow adventurers. FPS, or frames per second, dictates the fluidity of your in-game experience. Think of it as the engine of your gaming machine. A higher FPS, say 60 or 120, means smoother animations and a more responsive gameplay experience; vital for competitive titles and immersion alike. (Screen tearing is a separate issue: it comes from the frame rate and the monitor’s refresh rate being out of sync, and it’s handled by V-Sync or a variable refresh rate display, not by raw FPS.) A low FPS, on the other hand, results in a jerky, laggy mess that can ruin even the most visually stunning game.

While visually impressive graphics enhance immersion, they’re ultimately secondary to a smooth frame rate. High-resolution textures and complex shaders look amazing, but are useless if the game stutters and lags, hindering your ability to react effectively. Think of graphics as the artistry of the scene, while FPS is the foundation that supports it. A masterpiece painting on a crumbling wall isn’t going to be enjoyable. Aim for a balance: strive for a high FPS while tweaking your graphical settings to achieve a visually pleasing experience that your system can handle consistently. Experiment with settings like shadows, anti-aliasing, and texture quality – each has a different impact on performance. Don’t chase the highest graphical settings if it tanks your FPS; a smoother experience at slightly lower visual fidelity is a much better gaming experience.

What are the drawbacks of DLSS?

DLSS’s primary drawback is its exclusivity to NVIDIA RTX GPUs. NVIDIA’s market dominance and strong developer relationships have made the technology widespread, but its proprietary nature inherently limits accessibility for users with AMD or Intel graphics cards. This exclusivity creates a significant barrier to entry for a portion of the esports community, potentially impacting competitive parity and hindering wider adoption of enhanced visuals in esports broadcasts and streaming.

Furthermore, while DLSS offers impressive performance boosts, the quality of upscaling can sometimes be inconsistent, particularly at lower resolutions or with complex scenes. This inconsistency can be more noticeable in fast-paced esports titles, potentially impacting gameplay decisions dependent on precise visual information. The trade-off between performance and image quality remains a crucial consideration for competitive players seeking optimal balance.

Finally, the reliance on specific game integration presents a potential challenge. While DLSS support is growing rapidly, not all titles incorporate the technology, leaving users with supported hardware potentially without access to the performance benefits in specific games. This fragmented implementation can create inconsistencies across the esports landscape, further complicating matters for players and tournament organizers alike.

Is 500 frames per second overkill?

500 frames per second? Nah, for almost everyone that’s overkill. Seriously, there’s a sweet spot, and it’s way below that. It’s not that your eyes stop at 60fps (they don’t); it’s that every step up buys you less. Going from 60 to 120fps cuts the time per frame by about 8.3 ms, while going from 240 to 500fps saves only about 2.2 ms. On top of that, your monitor can only show as many frames as its refresh rate allows. Feeding 500fps to a 144Hz panel means the display is bottlenecking the whole system, and most of those frames never make it to the screen in full. Think of it like upgrading from a decent graphics card to a super-duper ultra-max graphics card – there’s a point where the gains are negligible. So yeah, 500fps? For most players, a massive waste of resources. Stick with a solid 120 or 144Hz monitor, and a frame rate to match, if you want some serious advantage in competitive games. Anything more is mostly showing off, and frankly, that’s not a good reason to upgrade. It’s about optimization, people. Remember that.

Now, I’ve played through thousands of games, and let me tell you, the difference between 60fps and 120fps is noticeable. The difference between 120fps and 500fps? Barely perceptible. You’re far better off spending that money on a better CPU, GPU, or even a superior monitor with better response times. These factors significantly impact gameplay experience, particularly reaction time and clarity, much more than just cranking up the frames past a practical threshold.
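The diminishing returns are easy to see if you convert frame rates to frame times. A quick sketch, using only the arithmetic 1000 ms divided by FPS (`frame_time_ms` is just an illustrative name):

```python
def frame_time_ms(fps: float) -> float:
    """Time each frame stays on screen, in milliseconds."""
    return 1000.0 / fps

steps = [30, 60, 120, 240, 500]

# Compare each frame rate with the next one up and show the time saved.
for slower, faster in zip(steps, steps[1:]):
    saved = frame_time_ms(slower) - frame_time_ms(faster)
    print(
        f"{slower} -> {faster} FPS: "
        f"{frame_time_ms(slower):.2f} ms -> {frame_time_ms(faster):.2f} ms "
        f"(saves {saved:.2f} ms per frame)"
    )
```

Each doubling of frame rate saves half as much time as the one before it, which is why the jump to 500 FPS is so hard to feel.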

Is 19 frames per second bad?

19 FPS? Dude, that’s unplayable garbage. Seriously, for anything beyond a point-and-click adventure, you’re looking at a minimum of 30 FPS. Anything less introduces noticeable input lag and makes precise movements impossible.

Competitive gaming? Forget about it at 19 FPS. We’re talking about the difference between winning and losing. For shooters, racers, fighting games – heck, even MOBAs – you need at least 60 FPS, ideally higher. This is where you get that buttery-smooth gameplay, crucial for reaction time and accuracy. Think about the difference between reacting to a flick shot in a split second and… well, getting headshotted because your game stuttered.

Here’s the breakdown:

- 30 FPS: Bare minimum for most genres. Tolerable, but far from ideal.

- 60 FPS: The sweet spot for competitive gaming. Smooth gameplay, minimal input lag.

- 120+ FPS: Pro-level performance. Noticeable difference in responsiveness, particularly in high-action games. High refresh rate monitors are essential to fully utilize this.

- Above 240 FPS: Diminishing returns for most. Unless you’re a pro player with exceptional reflexes and a high refresh rate monitor, you won’t notice much difference past this.

Pro Tip: Don’t just look at average FPS. Monitor your minimum FPS. A game averaging 60 FPS but dipping to 30 during intense moments is still going to feel choppy and negatively impact your performance.
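Here is a small sketch of why the average hides the dips. It assumes you have a list of per-frame times in milliseconds from some capture tool; the sample data below is invented, and "1% low" is computed in one common way (average FPS over the slowest 1% of frames), which may differ from what your benchmarking tool reports.

```python
def average_fps(frame_times_ms):
    """Average FPS over the whole capture."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

def minimum_fps(frame_times_ms):
    """FPS implied by the single slowest frame."""
    return 1000.0 / max(frame_times_ms)

def one_percent_low_fps(frame_times_ms):
    """Average FPS over the slowest 1% of frames (at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)

# Invented capture: mostly 15 ms frames with a handful of 33 ms spikes.
frame_times = [15.0] * 190 + [33.0] * 10

print(f"average: {average_fps(frame_times):.1f} FPS")         # about 63
print(f"minimum: {minimum_fps(frame_times):.1f} FPS")         # about 30
print(f"1% low:  {one_percent_low_fps(frame_times):.1f} FPS")  # about 30
```

The average looks like a comfortable 60+, but the spikes are what you actually feel in a firefight.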

Does DLSS look worse?

Let’s be clear, noob: DLSS isn’t about “looking worse.” That’s a scrub’s take. DLSS Super Resolution isn’t magic; it’s smart upscaling. It renders the game at a lower resolution and intelligently reconstructs the image to a higher resolution. (Ray Reconstruction is its sibling feature: an AI denoiser that cleans up ray-traced effects, not an upscaler.) The result? More frames, smoother gameplay, and fewer noticeable artifacts than most other upscaling techniques. Think of it as a high-level skill; it takes practice to master, but the payoff is huge. You’ll dominate the battlefield with higher framerates and a cleaner visual experience. Don’t let the haters fool you; it’s a game-changer.

The key is understanding the tradeoffs. You’re sacrificing *some* raw image detail for significantly better performance. But with proper settings and a decent GPU, the difference is often negligible. In fact, the improved fluidity often makes the slightly softer image *more* enjoyable. Mastering the in-game DLSS settings is crucial; experiment with different modes to find the perfect balance between performance and visual fidelity. This is where the real PvP advantage lies; a player with a locked 144fps thanks to DLSS has a noticeable edge over one stuttering at 60.

Think of it like this: you’re trading a few pixels for a massive improvement in responsiveness and smoothness. In PvP, that translates directly to wins. So ditch the outdated mentality. DLSS is a powerful tool; learn to wield it.
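To see what "trading a few pixels" means in practice, here is a sketch of the internal render resolution per DLSS mode. The per-axis scale factors below are the commonly cited defaults and are an assumption here; individual games can override them, so check the actual title.

```python
# Commonly cited per-axis render scales for DLSS Super Resolution modes.
# Treat these as assumptions: games may use different values.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}

def internal_resolution(out_width: int, out_height: int, mode: str):
    """Approximate resolution the game renders at before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(out_width * scale), round(out_height * scale)

def pixel_fraction(mode: str) -> float:
    """Share of the output pixels that are actually rendered."""
    return DLSS_SCALE[mode] ** 2

for mode in DLSS_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    print(f"{mode}: renders {w}x{h} for a 4K output "
          f"({pixel_fraction(mode):.0%} of the pixels)")
```

Under these assumptions, Performance mode at 4K renders a 1080p image, a quarter of the pixels, which is where the big FPS gains come from.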

Is high FPS good or bad?

High FPS (Frames Per Second) means smoother gameplay. More FPS generally results in a more fluid and responsive experience, minimizing motion blur and making the game feel more natural.

While higher is often better, there’s a point of diminishing returns. The jump from 30 to 60 FPS is obvious to nearly everyone; the steps beyond 60 are still visible on a high-refresh-rate monitor, but each one is subtler than the last, and a 60Hz monitor can’t show them at all. 60 FPS is thus considered the sweet spot for most gamers. However, competitive gamers often strive for higher frame rates (e.g., 144Hz monitors are popular for this reason).

30 FPS is acceptable, particularly on consoles where hardware limitations sometimes necessitate lower frame rates. While noticeably less smooth than 60 FPS, it remains playable for many games.

Factors affecting FPS include your hardware (CPU, GPU, RAM), game settings (resolution, graphical details), and the game itself. Lowering graphical settings can significantly boost FPS if your hardware is struggling.

In short: Aim for 60 FPS as a minimum for optimal smoothness. Higher is better for competitive gaming or for those with high-refresh-rate monitors, but beyond 60 FPS, the gains are less substantial for casual players.

What does increasing sharpness provide?

Sharpening isn’t about magically creating detail; it’s about enhancing the contrast along edges, making in-focus areas *pop*. Think of it as sculpting light and shadow at the pixel level, not adding information. A poorly sharpened image looks artificial, like a bad Photoshop job from 2003. Mastering sharpening is about subtlety; less is often more. Avoid overdoing it: you’ll crush fine detail and introduce nasty halos along edges.

If you work in Photoshop, Smart Objects are your best friend here; they allow non-destructive editing, meaning you can tweak your sharpening later without degrading the original image quality. Experiment with different sharpening techniques (unsharp mask, high-pass filter, etc.) to find what best complements your style and image. Remember to consider the context: a sharp landscape needs a different approach than a portrait, where skin texture is crucial.

Also, ensure your original image is properly exposed and developed before you even *think* about sharpening; sharpening a poorly exposed image just accentuates its flaws. Think of it like polishing a jewel; it highlights the brilliance, but you need a good jewel to begin with. Properly sharpening your images will give your work a professional edge, making your edits look like they were done by someone who’s spent years mastering their craft (aka, me).
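The "contrast along edges, not new detail" idea is easy to show on a single row of pixels. This is a minimal sketch of an unsharp mask using a simple box blur in plain Python; real editors use a Gaussian blur and a threshold setting, and the function names here are made up for the example.

```python
def box_blur(signal, radius=1):
    """Average each value with its neighbours (edges use a shorter window)."""
    blurred = []
    for i in range(len(signal)):
        lo = max(0, i - radius)
        hi = min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        blurred.append(sum(window) / len(window))
    return blurred

def unsharp_mask(signal, amount=1.0, radius=1):
    """Add back the difference between the original and a blurred copy."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]

# One row of brightness values (0 = black, 1 = white) with a single edge.
edge = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]

print([round(v, 2) for v in unsharp_mask(edge, amount=1.0)])
# Cranking the amount pushes values outside 0..1: those are the halos.
print([round(v, 2) for v in unsharp_mask(edge, amount=3.0)])
```

Only the two pixels next to the edge change: the dark side gets darker and the bright side gets brighter. The flat areas stay flat, so nothing new is "created", and with a high amount the overshoot goes past pure black and pure white, which shows up as halos.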

Does depth of field exist in real life?

Yo, what’s up, gamers! So, depth of field – does it actually exist IRL? Totally! It’s not some video game gimmick. Our eyes are lenses too, right? They can only focus at one distance at a time, and whatever sits nearer or farther goes soft. That’s the core of it.

Think of it like this: Your lens focuses on a specific point. Everything perfectly on that plane is sharp. But as you move away, things get blurry. That blurriness is depth of field in action.

It’s all about the circle of confusion. A point that’s out of focus gets smeared into a little blurry disc, and the circle of confusion is the biggest that disc can get before our eyes or the camera sensor stop reading it as a sharp point. Everything whose blur disc stays under that limit counts as in focus, and that range of distances is your depth of field.

Here’s the breakdown of what affects it:

- Aperture: Smaller aperture (higher f-number) = bigger depth of field (more in focus). Think of it like squinting – you see more clearly, more is in focus.

- Focal length: Longer lenses (like telephotos) have shallower depth of field. Wider lenses (like wide-angle) have more depth of field.

- Focus distance: Focusing closer to your subject generally results in shallower depth of field.

- Sensor size: Larger sensors generally mean shallower depth of field for the same framing and f-number, because you need a longer lens or a closer position to fill the frame.
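The breakdown above can be checked with the standard thin-lens depth of field formulas. This is a sketch, not a lens simulator: it assumes a circle of confusion of 0.03 mm (a common convention for full-frame cameras), works in millimetres, and uses made-up function names.

```python
import math

def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float = 0.03) -> float:
    """Hyperfocal distance: focus here and everything out to infinity is acceptably sharp."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def dof_limits_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness around the subject."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        return near, math.inf  # focused at or past hyperfocal: sharp to infinity
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far

def dof_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Total depth of field (far limit minus near limit)."""
    near, far = dof_limits_mm(focal_mm, f_number, subject_mm, coc_mm)
    return far - near

# Subject 3 m away in every case except the last.
print(f"50mm f/1.8 at 3 m: {dof_mm(50, 1.8, 3000) / 1000:.2f} m in focus")
print(f"50mm f/8   at 3 m: {dof_mm(50, 8, 3000) / 1000:.2f} m in focus")
print(f"85mm f/1.8 at 3 m: {dof_mm(85, 1.8, 3000) / 1000:.2f} m in focus")
print(f"50mm f/1.8 at 1 m: {dof_mm(50, 1.8, 1000) / 1000:.2f} m in focus")
```

Stopping down from f/1.8 to f/8 widens the in-focus zone, while a longer lens or a closer subject narrows it, exactly as the list says.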

So yeah, depth of field is real, and it’s a super important thing to understand whether you’re playing a game with realistic graphics or shooting a real-life video. Mastering it will give your shots a pro look!

What’s better, graphics or FPS?

Look, 60 FPS is the baseline. Anything below that and you’re gonna feel that input lag, especially in competitive games. Think shooters, fighting games – you *need* that responsiveness. Below 60 and you’re reacting to what happened a fraction of a second ago, putting you at a massive disadvantage.

But higher FPS is better, right? Well, yeah, up to a point. Most people can’t even *see* the difference past 144Hz/FPS, and even then it’s subtle. Beyond that, it’s diminishing returns. You’re spending money on hardware for a tiny improvement.

Graphics are a different beast. They impact immersion hugely. A stunning game world can make even a slightly lower frame rate tolerable. Conversely, a muddy mess of low-res textures at 144 FPS still feels clunky.

Here’s the breakdown:

- Competitive games (shooters, fighters): Prioritize FPS. Aim for the highest you can get within your hardware limitations. Graphics are secondary.

- Single-player story-driven games: A good balance is key. A lower FPS with great graphics can be more immersive than a high FPS with poor graphics. Find a sweet spot.

- Open-world games: It often depends on the game engine and optimization. Some run well at high settings with high FPS, while others struggle. Experimentation is key.

Ultimately, it’s about finding the best compromise for *your* experience. Don’t just chase numbers. Experiment with different settings, find the balance between visual fidelity and smooth gameplay that works best for *you*. Don’t blindly follow benchmarks – use them as a guide, not a rulebook.

Is 30 FPS normal?

30 FPS is generally considered the minimum acceptable frame rate for gaming. While many console games target this, it’s far from ideal. You’ll see noticeable judder and motion blur, especially in fast-paced scenes. Anything below 30 FPS becomes increasingly unpleasant to play. While playable, 30 FPS often feels sluggish compared to higher frame rates, like 60 FPS or even 120 FPS and above, which offer significantly smoother and more responsive gameplay. The difference is especially apparent in competitive games where reaction time is crucial.

Keep in mind: The perceived smoothness also depends on factors beyond just the frame rate, such as input lag and screen response time. A 30 FPS game with low input lag can feel more responsive than a higher-frame-rate game with significant input lag.

Why do old games look better on CRT monitors?

Let me tell you something, kid. Those old CRTs weren’t just some dusty relics; they were *magic*. The scanlines weren’t a bug, they were a feature. Standard-definition TVs were built for 480i: an interlaced signal that draws the odd lines in one pass and the even lines in the next, about 60 passes (fields) a second. Most 8- and 16-bit consoles didn’t play along; they sent a 240p signal that redrew the same set of lines on every pass and left the lines in between dark. Those dark gaps are the scanlines. This wasn’t some oversight; it was how consoles worked within the limitations of the hardware back then. The way those lines blended, the subtle blurring – that’s what gave those pixel-art masterpieces their unique character. It wasn’t just the resolution; it was the *entire image processing pipeline* inherent to the CRT that contributed to that classic look. The phosphor glow, the subtle flicker – it added a dynamic, almost organic quality that flat-screen LCDs struggle to replicate, no matter how many fancy shaders you throw at it. Try running some old SNES or Genesis ROMs on an LCD and you’ll see what I mean; it looks sterile, lifeless. It’s like comparing a beautifully aged Cabernet Sauvignon to a mass-produced wine cooler. You’re missing the whole experience, man. The scanlines aren’t just lines, they’re part of the aesthetic. They’re woven into the fabric of gaming history.

Think about it: It wasn’t just about the hardware either. Developers designed games specifically for those limitations. The art styles, the techniques – they were tailored to the way CRTs displayed the image. The effect on sprite animation, especially in games pushing the boundaries of the hardware, was huge. Run an old game in an emulator with no filters and you’ll get raw, razor-sharp pixels with none of the CRT’s scanlines or blending, which changes the overall look. This is why modern CRT shaders and filters go to immense effort to recreate a CRT screen on high-resolution displays, so they can bring back those amazing vintage looks.
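For a taste of what the simplest scanline filter does, here is a crude sketch in plain Python. It assumes the image is a list of rows of brightness values between 0 and 1, doubles the line count, and darkens every second line. Real CRT shaders model far more (beam shape, phosphor glow, colour masks), so this is only the most basic ingredient, and `add_scanlines` is a made-up name.

```python
def add_scanlines(image, darkness=0.5):
    """Double the row count and dim every second row to imitate the dark
    gaps between the lines a CRT actually draws."""
    output = []
    for row in image:
        output.append(list(row))                            # the lit line
        output.append([v * (1.0 - darkness) for v in row])  # the dimmed gap
    return output

# A tiny 3-line "sprite" of brightness values.
sprite = [
    [1.0, 1.0, 0.0],
    [0.5, 1.0, 0.5],
    [0.0, 1.0, 1.0],
]

for row in add_scanlines(sprite, darkness=0.75):
    print(row)
```

With `darkness=1.0` the gaps go fully black; lower values give a softer look that is closer to lines bleeding into each other.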