So, HDR on or off? It’s all about dynamic range. HDR is your weapon of choice when the scene’s brightness varies wildly – think bright sunlight hitting a dark interior. A single exposure just can’t capture everything; you’ll lose detail in either the highlights (blown-out whites) or the shadows (crushed blacks).

Think of it like this: HDR is about bracketing. You take multiple shots at different exposures – one properly exposed, one underexposed to preserve highlights, and one overexposed to bring out the shadows.

- Underexposed: Retains detail in bright areas that would otherwise be clipped.

- Properly Exposed: Provides a balanced base for the image.

- Overexposed: Reveals shadow details that would be lost otherwise.
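To make the bracketing arithmetic concrete: each stop (1 EV) doubles or halves the light reaching the sensor. The sketch below makes one assumption: aperture and ISO stay fixed and only the shutter speed varies across the bracket. That is a common approach, because changing aperture would also change depth of field. The function name `bracket_shutter_times` is hypothetical, not any camera's API.

```python
from fractions import Fraction

def bracket_shutter_times(base_shutter, ev_offsets):
    """Return one shutter time (in seconds) per EV offset.

    Assumes aperture and ISO are held fixed, so +1 EV means
    doubling the exposure time and -1 EV means halving it.
    """
    return [base_shutter * Fraction(2) ** ev for ev in ev_offsets]

# A classic three-shot bracket around a metered 1/125 s: -2, 0, +2 EV.
offsets = [-2, 0, 2]
times = bracket_shutter_times(Fraction(1, 125), offsets)
for ev, t in zip(offsets, times):
    print(f"{ev:+d} EV: {t} s (~{float(t):.4f} s)")
```

The +2 EV frame works out to 4/125 s. A camera would round that to its nearest available setting, 1/30 s.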

Then, clever software merges these exposures, intelligently combining the best parts of each to create a single image with a far wider dynamic range than you could achieve with a single shot. This results in a richer, more lifelike image with better detail in both the highlights and shadows.
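Real tools differ in the details. One well-known way to combine brackets directly into a displayable image is exposure fusion (Mertens et al.), which weights each pixel by how well exposed it is. Below is a heavily simplified, single-channel sketch of that idea, assuming the frames are already aligned. It skips the contrast and saturation weights and the multi-resolution blending of the full method, and it is not what Lightroom or Photoshop do internally.

```python
import math

def well_exposedness(value, sigma=0.2):
    """Gaussian weight favouring pixel values near mid-grey (0.5)."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_exposures(exposures):
    """Blend aligned exposures pixel by pixel.

    `exposures` is a list of images, each a flat list of pixel values in
    [0, 1] of equal length. Each output pixel is a weighted average in which
    well-exposed (near mid-grey) samples count most, so clipped highlights
    and crushed shadows contribute little.
    """
    fused = []
    for samples in zip(*exposures):
        weights = [well_exposedness(v) for v in samples]
        total = sum(weights) + 1e-12  # guard against division by zero
        fused.append(sum(w * v for w, v in zip(weights, samples)) / total)
    return fused

# Toy 3-pixel "images": under-, normally and over-exposed brackets.
under = [0.02, 0.10, 0.50]
normal = [0.10, 0.45, 0.98]
over = [0.45, 0.90, 1.00]
print(fuse_exposures([under, normal, over]))
```

In the last pixel, the normal and over frames are nearly clipped, so the result stays close to the underexposed frame's 0.50. That is exactly the "best parts of each" behaviour described above.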

However, HDR isn’t a magic bullet. It’s best used in scenes with significant contrast, and overusing it can lead to unnatural-looking, overly processed images. Also think about where the result will be viewed. A merged image that has been tone-mapped to a standard JPEG displays fine on any screen, but if you export in an HDR format, view it on an HDR-capable display. On a non-HDR display, an HDR file might look worse than a standard image.

- Assess your scene: High contrast? HDR is likely beneficial.

- Shoot bracketed exposures: Get those different exposure levels.

- Merge in post-processing: Use appropriate software – plenty of options exist, from Lightroom to Photoshop.

- Refine subtly: Don’t overdo it! Aim for realism, not a hyper-saturated look.

Does HDR really make a difference?

Look, HDR isn’t some marketing gimmick. It’s a massive upgrade. Forget screen size; the difference is night and day. The contrast ratio boost is insane – you’re talking deeper blacks, brighter whites, way more detail in both shadows and highlights. It’s not just about brighter colors; it’s about precision in color representation. That Wide Color Gamut (WCG) alongside HDR unlocks a far wider spectrum, resulting in richer, more vibrant, and more realistic colors. Think of it like this: you’re going from a standard definition stream to 4K with HDR. The visual fidelity is a complete game changer. It’s not just better; it’s a fundamentally superior image. We’re talking about a competitive edge in games – spotting enemies hidden in shadows, reacting to subtle environmental cues – HDR provides all that.

Don’t get me wrong, you need proper HDR-capable hardware to see the benefit. A low-quality HDR implementation won’t cut it. But done right? It’s a huge leap forward in image quality. Think of it as upgrading your gaming mouse: once you’ve felt the difference, the advantage keeps paying off session after session. HDR is that for your visuals.

How important is HDR in a TV?

HDR’s impact on gaming is significant. Higher peak brightness translates to more impactful explosions, vibrant environments, and better visibility in dark scenes – crucial for competitive titles. Wider color gamut delivers richer, more realistic visuals, enhancing immersion and detail. The improved dynamic range means you’ll see finer gradations between light and dark, revealing subtle textures and environmental details often lost in SDR. This isn’t just about pretty pictures; it directly impacts gameplay. For example, in shooters, discerning enemies in shadows is paramount, and HDR significantly improves this ability. While 4K and 8K focus on resolution (pixel count), HDR fundamentally alters the quality of those pixels, making them far more impactful. The difference isn’t subtle; it’s a qualitative leap in visual fidelity that directly benefits gameplay and immersion. Think of it as upgrading your entire palette of colors and lighting, rather than just increasing the canvas size. Consider the impact on games with realistic lighting and dynamic weather effects: HDR brings these elements to life in a way that SDR simply can’t match.

Noteworthy consideration: The effectiveness of HDR hinges heavily on the quality of the implementation in both the game and the TV. A poorly implemented HDR game will not look noticeably better than its SDR counterpart. Likewise, a low-quality HDR TV might not fully leverage the benefits. Look for TVs with wide color gamut coverage (e.g., DCI-P3 or Rec.2020) and high peak brightness (1000 nits or more) for optimal HDR performance.

Do you leave HDR on all the time?

So, HDR all the time? It’s a personal preference thing, really. Auto HDR on Xbox Series X|S (and Windows 11)? It’s a mixed bag. Sometimes it converts SDR games into surprisingly good-looking HDR, sometimes… not so much. Think of it like this: it’s a gamble, but often a worthwhile one.

My take? I generally leave it on. Why? Because for the vast majority of games, the improved contrast and wider color gamut are noticeable upgrades, even if the implementation isn’t perfect. You get richer blacks and more vibrant colors, which punches up the visuals quite a bit. There are exceptions, of course: with HDR on, some games end up looking slightly washed out or overly saturated, and those are better off without it. Experiment!

Pro-tip: If you notice issues like overexposure or overly dark scenes, tweak your HDR settings in your console’s display settings and game settings individually. You can often fine-tune the brightness, contrast and sometimes even color saturation specifically for HDR. It takes a little calibration, but the payoff is worth it.

Another thing: Make sure your display actually supports HDR properly. Check your monitor/TV specs. It’s pointless leaving it on if your screen isn’t capable of displaying it. You won’t see any benefit, and might even degrade the image quality.

What are the disadvantages of HDR?

Think of HDR like a powerful weapon in your visual arsenal – capable of stunning detail and vibrancy. But, like any high-powered weapon, it needs careful handling. Mastering it requires understanding its quirks, which are your boss fights in the visual world.

The Major Glitches (Disadvantages):

- Ghosting: This is your dreaded ‘lag’ enemy. Fast-moving objects can leave trails or blurry duplicates, like a persistent afterimage. It’s a common issue when merging different exposures, because each bracketed frame is captured at a slightly different moment, so anything that moves lands in a different spot in each one. Think of it as frame-rate stuttering, but for your image’s light. Mastering exposure bracketing and using software with proper deghosting is key to defeating it.

- Overprocessing: The ‘too much of a good thing’ problem. Pushing HDR too hard leads to an unnatural, overly saturated, and sometimes cartoonish look. It’s like maxing out all your character stats without considering synergy – the result is unbalanced and weak. Finding the right balance is crucial.

- Exposure Consistency (Especially in Videography): This is your toughest boss fight. Maintaining smooth, consistent exposure across video frames is incredibly challenging. Imagine trying to maintain perfect aim while moving; it’s difficult to keep your shots clean. Proper camera settings, tone mapping, and post-processing techniques are essential for victory.
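One common way software spots ghosting is to check whether the brackets agree once each is scaled back by its exposure time. This sketch assumes aligned frames with linear sensor values in [0, 1] (for example from RAW files, not gamma-encoded JPEGs). The `ghost_mask` function and its thresholds are hypothetical illustrations, not any tool's actual algorithm.

```python
def ghost_mask(exposures, times, threshold=0.1, low=0.05, high=0.95):
    """Flag pixels whose brackets disagree, a sign something moved.

    exposures: list of images, each a flat list of linear values in [0, 1].
    times: exposure time of each image, in the same order.
    Dividing a linear value by its exposure time estimates scene radiance,
    which should match across brackets for a static pixel. Clipped or
    near-black samples are skipped because they carry no reliable estimate.
    """
    mask = []
    for samples in zip(*exposures):
        radiances = [v / t for v, t in zip(samples, times) if low < v < high]
        if len(radiances) < 2:
            mask.append(False)  # not enough usable samples to compare
            continue
        mean = sum(radiances) / len(radiances)
        spread = (max(radiances) - min(radiances)) / mean
        mask.append(spread > threshold)
    return mask

times = [1.0, 2.0, 4.0]   # relative exposure times, one stop apart
frames = [[0.10, 0.10],   # pixel 0 is static, pixel 1 has motion
          [0.20, 0.60],
          [0.40, 0.40]]
print(ghost_mask(frames, times))  # [False, True]
```

Flagged pixels are typically filled from a single reference exposure instead of being blended, which trades some dynamic range in those spots for a clean, ghost-free result.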

Advanced Techniques (Unlockable Skills):

- Mastering Exposure Bracketing: Learn to precisely control the exposure range captured to minimize ghosting.

- Tone Mapping Mastery: This is your spell book for controlling the dynamic range and avoiding oversaturation. Experiment with different algorithms to discover the best fit for your style.

- Understanding HDR Software: Different HDR software packages offer unique tools and capabilities. Explore them to learn their strengths and weaknesses and discover which ones best suit your needs.

- Careful Post-Processing: Fine-tuning your HDR images/video is paramount. This is where you refine your work, removing imperfections, and bringing out the best details. It’s the equivalent of crafting the perfect weapon in a blacksmith’s forge.
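To show what a tone-mapping operator actually does, here is the classic global Reinhard operator, one of many algorithms you might experiment with. It assumes scene luminance has already been scaled so mid-tones land around 0.1 to 1. It then compresses any value from 0 upward into the displayable 0 to 1 range. The extended form adds a "white point" luminance that maps exactly to 1.

```python
def reinhard(luminance):
    """Global Reinhard operator: compresses [0, inf) into [0, 1)."""
    return luminance / (1.0 + luminance)

def reinhard_extended(luminance, white):
    """Extended Reinhard: values at `white` map exactly to 1.0, so the
    brightest highlights reach full display white instead of only
    approaching it. Values above `white` exceed 1.0 and would be clipped."""
    return luminance * (1.0 + luminance / (white * white)) / (1.0 + luminance)

for lum in [0.05, 0.5, 1.0, 4.0, 16.0]:
    print(f"{lum:>5}: basic={reinhard(lum):.3f} "
          f"extended={reinhard_extended(lum, 16.0):.3f}")
```

Small values pass through almost unchanged (L / (1 + L) is close to L when L is small), while highlights are squeezed hard. Push the compression too far with local operators and you get exactly the flat, halo-prone "overprocessed" look described above.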

Should I turn on HDR on my camera?

So, HDR on your camera? It’s a bit more nuanced than a simple yes or no. Your camera’s sensor, much like your own eyes, has limitations. It struggles with high dynamic range scenes – those with crazy bright highlights and deep, dark shadows. Think of a sunset with a brightly lit building in the foreground.

What HDR does: It cleverly overcomes this limitation by taking multiple exposures of the same scene at different brightness levels. A dark exposure preserves detail in the highlights, a bright one captures the shadows, and they’re intelligently blended together. The result? A single image with a much wider tonal range than you’d get otherwise – richer blacks, brighter whites, and more detail throughout the entire scene.

However, there’s a catch:

- It’s computationally expensive. Processing multiple images takes time and battery power.

- It can be overly processed. Some HDR implementations can produce an artificial, almost cartoonish look, especially on scenes that don’t actually need it. Think over-saturated colors and a halo effect around bright objects.

- Motion blur is a risk. Because it takes multiple shots, any movement in the scene will likely result in ghosting or blur.

When to use it:

- Scenes with extreme contrast (bright highlights and deep shadows).

- Landscapes with a wide range of brightness.

- Architectural photography where you want to capture both interior and exterior detail.

When to avoid it:

- Fast-moving subjects.

- Scenes with already good dynamic range.

- If you prefer a more natural, less processed look.

Pro Tip: Experiment! Take a shot with HDR and one without. Compare them and see what works best for your style and the particular scene. Don’t rely on automatic HDR; learn to adjust its settings for optimal results.

Which is better, HDR or non-HDR?

The short answer: HDR is usually significantly better than non-HDR, but it’s not an unconditional “yes”. The improvement is substantial, offering a noticeably more realistic and immersive viewing experience.

Let’s break down why:

- Wider Color Gamut: HDR displays can reproduce a far broader spectrum of colors than standard dynamic range (SDR) displays. This translates to richer, more vibrant, and more accurate colors, making images pop with life.

- Higher Brightness and Contrast: HDR boasts significantly higher peak brightness, allowing for incredibly bright highlights and inky blacks simultaneously. This results in a far greater sense of depth and detail, particularly in high-contrast scenes. Non-HDR struggles to represent both bright and dark areas effectively, leading to washed-out highlights or crushed blacks.

- Improved Detail in Highlights and Shadows: The increased dynamic range means HDR TVs preserve detail in both the brightest and darkest parts of an image. SDR often loses detail in these areas, resulting in a less nuanced and realistic picture.

However, there are caveats:

- Content is Key: HDR’s benefits only shine through with HDR-encoded content. Watching standard SDR content on an HDR TV won’t magically improve it; you’ll essentially just have a brighter picture. Look for 4K HDR content for the full impact.

- HDR Formats: Several HDR formats exist (HDR10, Dolby Vision, HLG, etc.), each with its own capabilities. Dolby Vision, which uses scene-by-scene (dynamic) metadata rather than HDR10’s single set of static metadata, generally offers the best picture quality, but HDR10 is more widely supported.

- Price Point: HDR TVs tend to be more expensive than non-HDR equivalents. Weigh the cost against the visual upgrade based on your viewing habits and budget.

In essence, if you’re serious about picture quality and consume a lot of 4K HDR content, the upgrade is undeniably worth it. But if your budget is tight or you primarily watch standard definition or older content, a non-HDR TV might suffice.

Is HDR good or bad for videos?

HDR? Dude, it’s a game-changer. Forget washed-out highlights and crushed blacks; HDR delivers way more dynamic range than SDR, getting much closer to what your eyes see in the real world. That means insane detail in both the bright and dark areas – think seeing the enemy’s subtle movements in the shadows, or spotting that crucial health pack glinting in the sun. No more squinting!

Benefits for Esports:

- Competitive Edge: Spot enemies easier, react faster to environmental cues.

- Immersion: The vibrant colors and insane detail make the game world feel way more real. It’s like you’re *right there* in the arena.

- Improved Gameplay: Seeing every detail boosts your situational awareness. This is massive in fast-paced games.

Think of it like this: Standard Dynamic Range (SDR) is like playing with your lighting and color settings stuck on low. HDR is like cranking them to ultra. It won’t change your resolution or refresh rate, but you’ll notice the difference immediately: cleaner visuals, more readable scenes, and a real competitive edge.

HDR isn’t just a pretty picture; it’s a strategic advantage. It can make the difference between winning and losing, especially in those nail-biting clutch moments.

Does HDR use more power?

The power consumption difference between HDR and non-HDR monitors is a nuanced topic, often overblown. While HDR monitors can draw slightly more power, the increase is generally negligible for most users. This is because the power draw depends heavily on the peak brightness level.

Factors Influencing Power Consumption:

- Peak Brightness: HDR’s advantage lies in its expanded luminance range. Higher peak brightness settings demand more power. A monitor showcasing a bright HDR scene will consume more power than one displaying a dark scene, regardless of HDR capability.

- Backlight Technology: The type of backlight (e.g., edge-lit LED vs. full-array local dimming (FALD)) significantly impacts power draw. FALD, offering superior contrast, typically consumes more power due to its more complex backlight control.

- Panel Type: Different panel technologies (IPS, VA, OLED) have inherent power consumption differences. Because each OLED pixel produces its own light, OLED power draw scales with what’s on screen. It often consumes less power than comparable IPS or VA panels, even with HDR enabled, but can draw more for bright, full-screen scenes.

- Monitor Size: Larger monitors, regardless of HDR capability, generally use more power.

Practical Implications for Gamers:

- The power increase from HDR is rarely substantial enough to impact your gaming session or electricity bill significantly. Don’t let this concern overshadow the visual benefits.

- Focus on optimizing your in-game settings. Reducing brightness, disabling unnecessary visual effects, and choosing appropriate HDR settings can significantly reduce power consumption without compromising the experience too much.

- If power consumption is a major concern, consider the overall efficiency of your monitor rather than solely focusing on the HDR aspect. Look for energy efficiency ratings (e.g., Energy Star certification).

In short: While HDR might increase power consumption slightly, it’s often outweighed by the visual improvements and shouldn’t be a major deciding factor in purchasing a monitor.

Is HDR good or bad for TV?

So, HDR on your TV? It’s a massive upgrade, especially if you’re a gamer. Forget those washed-out colors of standard HDTVs – we’re talking a jump from about 17 million colors (8 bits per channel) to potentially billions (10 bits or more) with HDR and Wide Color Gamut (WCG). Think of it like this: SDR is a crayon box with like, 12 sad crayons. HDR with WCG is a massive art supply store explosion of vibrant color. It’s insane.
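The "17 million" and "billions" figures come straight from bit depth. SDR video is typically 8 bits per color channel, HDR10 uses 10 bits, and Dolby Vision supports up to 12. Here is a quick sketch of the arithmetic:

```python
def color_count(bits_per_channel):
    """Distinct RGB values available at a given bit depth per channel."""
    levels = 2 ** bits_per_channel  # shades per red, green or blue channel
    return levels ** 3

for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} levels per channel, "
          f"{color_count(bits):,} colors")
```

Bit depth controls how finely colors are subdivided, which means smoother gradients and less banding. The wide color gamut is what determines how far those colors reach, so HDR pairs the two.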

Here’s the real gamer benefit breakdown:

- More detail in shadows and highlights: See enemies hiding in the dark better. Spot that sniper before they get you.

- More realistic visuals: Environments look way more immersive and detailed. It’s not just pretty; it helps your gameplay.

- Better contrast: Blacks are deeper, whites are brighter, making everything pop.

- Higher dynamic range: This translates to more realistic lighting effects in games, leading to a more competitive edge. Think explosions, fire, sunsets – it all looks leagues better.

Now, there’s a bit of a catch. You need an HDR-capable game and an HDR-capable display to get the full effect. But if you’ve got both? Prepare to be blown away. It’s not just about pretty pictures; it’s about enhanced gameplay and a much more immersive experience.

Different HDR standards exist (like HDR10, Dolby Vision, HLG). Dolby Vision generally offers the best picture quality but needs dedicated support in both the TV and the source device. HDR10 is more widely supported but might not look quite as good. Just make sure your TV and games support some form of HDR.

Does HDR just make things brighter?

So, HDR – is it just a brightness boost? Nah, it’s way more than that. Think of SDR as your standard definition, your trusty old VHS. HDR is like stepping up to 4K Blu-ray – a massive leap. You get significantly brighter highlights, but crucially, it’s also about the shadows. HDR expands the dynamic range, meaning you get far more detail in both the bright and dark areas. Forget those crushed blacks and blown-out whites; HDR nails the details across the entire spectrum. Plus, it usually comes with a wider color gamut (WCG), so colors are far more vibrant and realistic. It’s not just a brighter image; it’s a richer, more nuanced one.

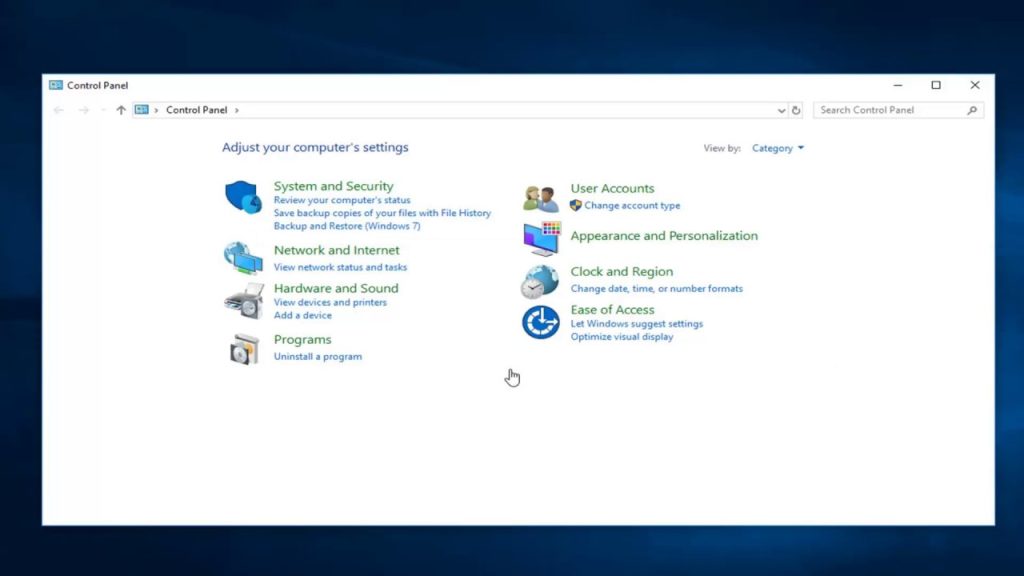

On Windows 10, if you’ve got an HDR-capable monitor and a system that supports it, you’ll unlock this enhanced visual experience. There’s a steadily growing library of HDR games and apps in the Microsoft Store, so you’ve got plenty to explore. Just make sure your hardware and software are all set up correctly to enjoy the full HDR experience – it’s worth the setup; the difference is night and day. Trust me, I’ve seen thousands of sunsets in countless games, and HDR makes them truly pop.

Does HDR improve picture quality?

HDR, or High Dynamic Range, isn’t just a buzzword; it’s a fundamental shift in how we experience image fidelity. Forget washed-out highlights and crushed blacks – HDR unlocks a vastly expanded range of luminance, allowing for a far greater dynamic range than standard dynamic range (SDR) content. This translates to breathtaking realism. Think of it like this: SDR is a limited palette, while HDR is a full spectrum of colors and brightness. You’ll see details in the darkest shadows and the brightest highlights that were previously lost to compression or the limitations of the display technology. A sunlit landscape will feature vibrant, crisp highlights on the sunlit foliage without losing the rich detail in the shadows of the trees. Similarly, a dimly lit scene will reveal subtle facial expressions and textures that previously appeared flat or muddy. This dramatic increase in detail and contrast elevates the viewing experience from “good” to “immersive”. The difference isn’t subtle; it’s transformative. It’s the difference between seeing a photograph and being *present* in the scene.

This enhanced detail isn’t just about pretty pictures; it dramatically improves scene readability. In action scenes, you’ll discern details that inform the narrative, like the glint of metal on a weapon or the fine dust kicked up by a speeding vehicle. The heightened contrast allows for a more accurate depiction of depth and distance, adding to the overall sense of presence and immersion. The key takeaway? HDR isn’t an upgrade; it’s a whole new level of visual storytelling.

Furthermore, HDR’s impact extends beyond just brightness and contrast. Wide color gamuts, often used in conjunction with HDR, significantly expand the available color palette, resulting in more vibrant and lifelike colors. This combination of increased luminance, contrast, and color accuracy delivers an unparalleled level of visual fidelity, far exceeding the capabilities of traditional SDR displays.

Should I get HDR on TV or not?

The HDR vs. SDR debate is crucial for competitive gaming. While SDR provides a decent picture, HDR significantly enhances visual fidelity, offering a competitive edge. This isn’t just about “prettier” graphics; it’s about improved gameplay.

Key Advantages of HDR in Esports:

- Expanded Color Gamut and Brightness: HDR displays a far wider range of colors and brighter highlights than SDR. In fast-paced games, this means subtle details, like enemy camouflage or environmental cues, become much clearer, providing a crucial advantage in spotting opponents and reacting quickly.

- Improved Contrast: HDR’s superior contrast ratio enhances visibility in both bright and dark areas. This is especially valuable in games with complex lighting conditions, allowing for quicker identification of targets even in shadow.

- More Detail in Highlights and Shadows: HDR preserves detail in extremely bright and dark sections of the image. This is critical in discerning opponents hiding in shadows or identifying subtle changes in lighting that could signal an approaching enemy.

- More Immersive Experience: The increased realism provided by HDR can make extended gameplay sessions more engaging, which may help some players stay focused and maintain peak performance in competitive scenarios.

Practical Considerations:

- HDR Content Availability: Ensure your games and streaming services support HDR. Not all titles offer HDR, limiting its benefits in some instances.

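- Hardware Requirements: A compatible HDR display, a graphics card or console that outputs HDR, and a connection that carries it (HDMI 2.0a or higher, or DisplayPort 1.4 or higher, with a cable rated for the bandwidth) are essential for HDR functionality.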

- Calibration: Proper HDR calibration is crucial to optimize picture quality and avoid washed-out or overly saturated colors. A poorly calibrated HDR setup can actually hinder performance.
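Why is HDMI 2.0 the floor, and why is it still tight? Here is a rough sketch of the link-rate arithmetic. It assumes the standard CTA-861 timing for 3840×2160 at 60 Hz, which is 4400×2250 total pixels including blanking, for a 594 MHz pixel clock. It also assumes HDMI's TMDS encoding, which sends 10 bits on the wire for every 8 bits of data. HDMI 2.0 tops out at 18 Gbps.

```python
HDMI_2_0_MAX_GBPS = 18.0  # HDMI 2.0 maximum TMDS bandwidth

def tmds_gbps(pixel_clock_hz, bits_per_component, components=3):
    """Approximate TMDS link rate for full-resolution (4:4:4) RGB video.

    TMDS encodes every 8 data bits as 10 bits on the wire, hence 10 / 8.
    """
    return pixel_clock_hz * bits_per_component * components * 10 / 8 / 1e9

# Total pixels per frame (including blanking) times 60 frames per second.
CLOCK_4K60 = 4400 * 2250 * 60

for bpc in (8, 10):
    rate = tmds_gbps(CLOCK_4K60, bpc)
    verdict = "fits" if rate <= HDMI_2_0_MAX_GBPS else "exceeds"
    print(f"4K60 RGB {bpc}-bit: {rate:.2f} Gbps ({verdict} HDMI 2.0)")
```

8-bit SDR squeezes in at about 17.8 Gbps, but 10-bit HDR at full 4:4:4 does not. That is why 4K60 HDR over HDMI 2.0 typically drops to 4:2:2 or 4:2:0 chroma subsampling, and why full 4:4:4 10-bit at higher refresh rates calls for HDMI 2.1.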

In short: While the initial investment in HDR-capable equipment is higher, the performance benefits in competitive gaming, due to improved visual clarity and detail, make it a worthwhile upgrade for serious esports athletes.

Does HDR drain the battery?

Yeah, HDR’s a battery hog. Most of the extra drain comes from driving the screen at higher brightness to render those vibrant highlights, plus some added work decoding and processing HDR content. Think of it like cranking your graphics settings to ultra – it looks amazing, but the juice is gonna drain fast. Expect noticeably shorter battery life when gaming or watching HDR content on battery power. Manufacturers often disable HDR by default on battery to mitigate this; it’s a power-saving measure, not a bug.

Pro-tip: If you’re a battery-conscious gamer, consider lowering your in-game HDR settings, or even disabling it altogether, when unplugged. You can often find HDR settings within your game’s graphics options or in your operating system’s display settings. You’ll still get a great visual experience with less impact on your battery. Finding that sweet spot between visual fidelity and battery life is key to extended playtime on the go.

Another thing to consider: The brightness level plays a huge role. A brighter HDR display will naturally consume more power. Keep your brightness at a reasonable level to maximize battery life.

When to use HDR mode?

Yo, what’s up, gamers? So, HDR mode, right? It’s like this crazy cheat code for your camera. Think of it this way: your camera’s got a limited vision, it can only see a certain range of brightness – kinda like how your monitor can only display a certain range of colors. But the real world? It’s way brighter and darker, with way more detail than your camera can normally grab in a single shot.

HDR is where you stack multiple shots – one super dark, one perfectly exposed, and one super bright – then the camera or software blends them together. Boom! You get this insane level of detail, the shadows have definition, the highlights aren’t blown out, it looks way more realistic, almost like you’re actually *there* witnessing the scene. It’s like unlocking a higher graphic setting in your game, but for real life.

Now, when do you use it? Think high-contrast scenes: sunsets, sunrises, bright cityscapes with dark alleys, anything with huge differences in brightness. It really shines in those situations. But, don’t go crazy, it’s not a magic bullet. Sometimes it can look a little artificial if overused, and it does take more time and processing power since you’re taking multiple exposures. Think of it as a powerful tool, use it wisely!

Do smart TVs have HDR?

Look, 4K and 8K TVs? HDR’s practically a given. You’re talking vibrant colors and contrast so deep, it’s like stepping into the game. UHD resolution just adds to the insane detail; it’s a visual feast. Think of it as maxing out your graphics settings – but permanently.

Samsung’s 2025 models? They’ve got this Auto HDR Remastering thing. It’s a cheat code for your eyeballs. Takes regular content and magically boosts it to HDR. It’s not quite the same as native HDR, but it’s a damn good SDR-to-HDR converter, adding that extra pop to even old games or movies. Expect a noticeable difference, especially with darker scenes; details previously lost in the shadows suddenly appear. This is crucial for competitive gaming where spotting enemies is paramount.

Basically, if you’re serious about visuals, HDR is non-negotiable. It’s not just a fancy feature; it’s a game changer. Don’t even think about settling for anything less. Your immersion hinges on it.

Does HDR make videos blurry?

The assertion that HDR makes videos blurry is inaccurate. Blurriness in Instagram videos, or any video platform, is rarely caused directly by HDR itself. HDR (High Dynamic Range) affects the color and contrast range, not the sharpness or resolution. Dull, blurry, or unplayable videos on Instagram are more likely due to several other factors:

- Incorrect Encoding/Compression: Instagram’s compression algorithms can sometimes negatively impact video quality, particularly when dealing with high-resolution or high-bitrate source material. Improperly configured encoding settings during the export process significantly contribute to blurriness and artifacts.

- Low Resolution Source: The original video file may have a low resolution, leading to blurring regardless of HDR. Uploading a low-resolution video and then attempting to apply HDR will not magically enhance the detail.

- HDR Metadata Issues: While unlikely to directly cause blur, metadata inconsistencies in the HDR file can lead to playback problems on certain devices or platforms. Instagram’s support for HDR metadata may be a limiting factor.

- Platform Limitations: Instagram’s processing and delivery infrastructure might not fully support the specific HDR format used, causing playback issues or quality degradation. Different devices and platforms handle HDR differently, leading to inconsistencies in the final output.

- Network Issues: Poor internet connection during upload or playback can result in buffering, pixelation, and the perceived blurriness of the video.

Troubleshooting Steps:

- Ensure your source video is of high enough resolution.

- Re-encode your video with optimized settings for Instagram, potentially using lower bitrates for smoother uploads. Experiment with different codecs.

- Check for HDR metadata compatibility. Some software can modify or remove HDR metadata.

- Test uploading the video to other platforms to isolate if the problem is specific to Instagram.

In short, blame the encoding, the source material, or the platform, not the HDR itself. HDR is a feature intended to enhance visual quality, not diminish it.

What is the difference between HDR and normal brightness?

The core difference between HDR (High Dynamic Range) and standard brightness, or SDR (Standard Dynamic Range), boils down to luminance—the perceived brightness of light. SDR displays typically max out around 100-300 nits (a nit is a unit of luminance). This is perfectly fine for most viewing situations, but it severely limits the visual impact. Imagine a sunlit scene: SDR struggles to represent the extreme brightness of the sun alongside the darker shadows simultaneously. The result is often a washed-out image lacking detail in both highlights and shadows.

HDR, however, dramatically expands this range. The HDR10 signal format can describe highlights of up to 10,000 nits, though consumer displays typically peak closer to 1000 nits, and many fall short of that. This allows for a far greater contrast ratio, the ratio between the brightest white and the darkest black. What this means for you is a more realistic and visually stunning image, with far more detail in the brightest highlights (like that sun) and the deepest shadows. Think vibrant colors that pop, and subtle gradations previously invisible on an SDR screen.
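Where does the 10,000-nit figure come from? HDR10 encodes brightness with the PQ (Perceptual Quantizer) curve, standardised as SMPTE ST 2084. It maps a normalised signal value between 0 and 1 to an absolute luminance between 0 and 10,000 nits. Below is a sketch of the curve and its inverse, using the constants from that standard. The function names are our own.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # the absolute ceiling PQ can describe

def pq_to_nits(signal):
    """PQ EOTF: normalised signal in [0, 1] -> luminance in nits."""
    p = signal ** (1 / M2)
    y = (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)
    return PEAK_NITS * y

def nits_to_pq(nits):
    """Inverse EOTF: luminance in nits -> normalised PQ signal."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2

for nits in (100, 1000, 10000):
    print(f"{nits:>5} nits -> PQ signal {nits_to_pq(nits):.3f}")
```

Run it and you'll find that a 1000-nit highlight already sits at roughly three quarters of the signal range. Meanwhile, the bottom half of the signal covers less than 100 nits. PQ deliberately spends most of its code values on the darker tones, where our eyes are most sensitive to steps.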

It’s not just about peak brightness though; HDR also encompasses a wider color gamut (the range of colors a display can produce). This leads to richer, more saturated colors that better approximate real-world colors, making the viewing experience significantly more immersive.

However, HDR isn’t a simple upgrade. It requires both HDR-capable hardware (display, source device) and HDR-mastered content. Simply upscaling SDR content to HDR won’t magically provide those benefits; it’s the mastering process that’s crucial for achieving the full HDR effect. Without correctly mastered content, you might only see a slightly brighter image, not the dramatic improvement HDR is capable of.

Should auto HDR be on?

Auto HDR? It’s a crapshoot, honestly. Some games benefit immensely, popping with vibrancy and detail that SDR simply can’t match. Think of it as a quick and dirty way to boost the visual fidelity – sometimes it’s a godsend, especially on older titles. But other times, it washes things out, oversaturates colors, or introduces weird halos around bright objects. It really depends on the game’s engine and how it handles tone mapping.

My advice? Experiment. Keep Auto HDR on for a few hours, then toggle it off and play the same section. Compare directly. Pay close attention to how it affects shadow detail, highlights, and overall color balance. If it looks better with it off, then it’s off. Simple as that. Don’t let anyone tell you there’s a single right answer. Your eyes are the ultimate judge.

Pro-tip: If you’re experiencing issues like flickering or weird color banding with Auto HDR, try adjusting your monitor’s settings. Things like brightness, contrast, and even the backlight can drastically impact how Auto HDR renders the image. It’s often not the HDR itself, but the interaction between the game and your display.

Ultimately, it’s a personal preference. I generally keep it on because the upsides often outweigh the downsides for me, but your mileage may vary. Don’t be afraid to tweak!

Is HDR necessary for 4K?

Alright folks, so you’re asking if HDR is a must-have for 4K? Think of it like this: 4K is the resolution, the raw number of pixels. It’s like having a super high-res map, incredibly detailed, but completely flat. HDR, on the other hand, is the terrain, the vibrant colors, the deep shadows, the bright highlights – it gives that map depth and realism.

You *can* play the game (watch 4K content) on a standard screen, you’ll still see the crisp 4K resolution, it’ll just look…flat. Like a 2D painting compared to a 3D cinematic masterpiece. You’re missing out on the incredible dynamic range, the expanded color space – think of it as the difference between a dull, washed-out palette and a vibrant, intensely detailed one. HDR is like unlocking all the graphical settings in a game – it pushes the visual fidelity to the absolute maximum.

Without HDR, you’re playing on ‘easy mode’ visually speaking. You’re not experiencing the full potential of that 4K resolution. It’s like having a supercar, but never taking it above 40mph. So yeah, it’s technically 4K, but it ain’t the full 4K experience.

Think of it like this: HDR is the secret sauce. It’s what takes that 4K resolution and transforms it from a high-definition image into something truly breathtaking. You can play the game without it, but you’ll be missing out on some seriously awesome visual fidelity.