Alright viewers, let’s talk picture quality. Forget the auto settings; they’re usually garbage. You’ve gotta dive into those expert settings to *really* unlock your TV’s potential. Think of it as fine-tuning a race car – small adjustments make a HUGE difference.

First, find your TV’s settings menu. Usually, it’s a cogwheel or gear icon. Then, look for “Picture,” “Image,” or something similar.

Next, locate “Expert Settings” or “Advanced Settings.” This is where the magic happens. Here’s the breakdown:

- Brightness: Don’t max it out! Aim for a comfortable level where you can see detail in both dark and bright scenes. Use a test pattern (easily found online) for best results.

- Contrast: This controls the difference between black and white. Too high, and you lose detail in bright highlights. Too low, and everything looks washed out. Again, test patterns are your friends.

- Sharpness: A common mistake is cranking this up to 11. High sharpness creates halos and artificial detail, making the picture look grainy and unnatural. A subtle increase might be needed, but less is often more.

- Color: Adjust this based on your preference and the content. Most TVs benefit from a slight reduction in saturation for a more realistic look. Experiment with different color temperature settings (usually “Warm,” “Neutral,” or “Cool”) to find your sweet spot.

Beyond the basics:

- Backlight (if applicable): Controls the overall brightness of the screen. Adjust this based on your room’s ambient light.

- Local Dimming (if applicable): Improves contrast by adjusting brightness in different zones of the screen. Experiment with different settings, but be aware that aggressive local dimming can sometimes cause some “haloing” around bright objects on a dark background.

- Motion Smoothing/Interpolation (often called “Soap Opera Effect”): This reduces motion blur, but can make the picture look unnatural and overly smooth. Turn it off unless you really like that look.

- Picture Modes: Experiment with different pre-sets like “Movie,” “Game,” or “Vivid” to see what works best with your content. Remember that these are often starting points – you’ll likely need to further tweak the individual settings within each mode.

Pro-tip: Calibrate your TV using a professional calibration tool or a detailed online guide for your specific TV model. This will provide the most accurate and optimized image.

How do I get the perfect picture on my TV?

Getting that perfect picture on your TV isn’t about magic; it’s about understanding your set’s capabilities and tweaking its settings. Forget the “sharper is better” myth. Over-sharpening introduces artifacts, making the image look artificial and grainy. Subtlety is key. Reduce the sharpness setting until the image appears clean and natural, avoiding halos around objects.
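To see why over-sharpening produces halos, here is a minimal sketch. It assumes a simple one-dimensional sharpening filter (each pixel is pushed away from its two neighbours); real TVs use their own, undisclosed processing, so the `sharpen` function and the `amount` parameter are purely illustrative.

```python
def sharpen(signal, amount):
    """Toy 1D sharpening: push each sample away from its two neighbours.

    `amount` is a hypothetical strength setting (0 = off).
    Edge samples reuse themselves as the missing neighbour.
    """
    out = []
    last = len(signal) - 1
    for i, centre in enumerate(signal):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, last)]
        out.append(centre + amount * (2 * centre - left - right))
    return out


# A clean edge between a dark area (0.2) and a bright area (0.8).
edge = [0.2] * 4 + [0.8] * 4

untouched = sharpen(edge, 0.0)  # sharpening off: the edge is unchanged
cranked = sharpen(edge, 1.0)    # sharpening maxed: values overshoot the edge

print("off:    ", untouched)
print("cranked:", cranked)
```

With sharpening cranked, the samples next to the edge go darker than the dark side and brighter than the bright side. That undershoot and overshoot is the halo you see around objects.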

Motion smoothing (often called soap opera effect) is another culprit. It creates that unnatural, hyper-smooth, video-like look that many find distracting. Disable it completely for a more cinematic and film-like experience, especially if you’re watching movies. The natural motion you get back is well worth the trade.

Vivid mode is a marketing gimmick. It blasts colours and contrast, resulting in an oversaturated, unrealistic look with blown-out highlights. Opt for a more neutral setting like “Movie” or “Cinema” mode. Then, gradually reduce brightness until you achieve optimal detail in both dark and light areas, without crushing blacks or blowing out highlights. You want depth and richness, not a flat image.

Contrast needs careful calibration. Too high, and you lose detail in both shadows and highlights. Too low, and the image lacks pop. The ideal setting varies by content and room lighting. Experiment, and you’ll find the sweet spot that reveals all the nuance in the picture.

Finally, choose the correct picture mode based on your content. Most TVs offer presets for movies, games, sports, etc. Each mode is optimized for specific content, and switching accordingly will improve your viewing experience. For gaming, look for a mode with low input lag to minimize response times. For movies, a cinematic mode will enhance the depth and realism.

What is the resolution of HD set top box?

Tata Play’s HD set-top boxes offer a 1080i resolution, which is a great step up from standard definition. While not true 1080p, 1080i still delivers a significantly improved picture quality with sharper details and better color representation than SD. It’s important to note the “i” signifies interlaced scanning, meaning each frame is built in two passes: first one set of alternating lines, then the other. This can sometimes lead to slight flicker or combing, especially on fast-moving scenes, compared to the progressive scan of 1080p. However, for most viewers, the difference is negligible, especially on smaller screens.

The inclusion of Dolby Digital audio is a significant plus, providing a much more immersive and cinematic sound experience than standard stereo. This is especially beneficial when watching action movies or sporting events. The difference in audio quality is often more noticeable than the subtle differences between 1080i and 1080p video.

Key Considerations:

- Your TV’s Capabilities: Ensure your television supports 1080i input. Most modern HD TVs will, but it’s always good to check your TV’s specifications.

- HDCP Compliance: Make sure your set-top box and TV are both HDCP compliant. This ensures that copyrighted HD content can be displayed correctly. Older devices might lack this, resulting in no picture or poor video quality.

- Signal Quality: The actual quality you experience will depend on the quality of the signal from your provider. Weak signals can affect both picture and sound quality regardless of the set-top box’s capabilities.

- Alternative Resolutions: While 1080i is the advertised resolution, the box may output lower resolutions depending on the source material or your TV settings. This is a common characteristic.

In short: 1080i with Dolby Digital provides a solid HD viewing experience, though a true 1080p would be even better. The HD recording feature is also a nice addition for those who like to keep their favorite shows.

What is the difference between 1080i and 1080p set top box?

While both 1080p and 1080i boast a 1920×1080 resolution, the crucial difference lies in how they deliver that resolution: scanning methodology. This seemingly minor detail significantly impacts image quality and the overall viewing experience.

1080p (Progressive Scan): This is the superior format. Think of it as painting a picture line by line, from top to bottom, completing each line before moving to the next. Each frame is a complete picture, resulting in a sharper, more detailed image with less motion blur, especially in fast-paced scenes.

1080i (Interlaced Scan): Imagine weaving a tapestry. 1080i weaves the image, displaying only *odd* lines of the frame initially (field 1), then filling in the gaps with the *even* lines (field 2) to form a full frame. While the final resolution is still 1080 lines, each field is only half the vertical resolution. This interlacing process, while efficient for broadcast transmission, can lead to noticeable artifacts like “jaggies” or combing during fast movements, particularly on screens that don’t handle de-interlacing well. It also usually broadcasts at 60 fields per second (which are half-frames), equating to 30 frames per second (fps) of complete imagery.
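The field structure described above can be sketched in a few lines. This is only an illustration: the frame is modelled as a plain list of 1080 labelled lines, and `split_fields` and `weave` are hypothetical helper names, not part of any broadcast toolkit.

```python
def split_fields(frame):
    """Split a full frame (a list of lines) into its two interlaced fields."""
    field_1 = frame[0::2]  # lines 1, 3, 5, ... (the odd lines, counting from 1)
    field_2 = frame[1::2]  # lines 2, 4, 6, ... (the even lines)
    return field_1, field_2


def weave(field_1, field_2):
    """Rebuild a full frame by alternating lines from the two fields."""
    frame = []
    for odd_line, even_line in zip(field_1, field_2):
        frame.append(odd_line)
        frame.append(even_line)
    return frame


# A stand-in for one 1080-line frame.
frame = [f"line {n}" for n in range(1, 1081)]

field_1, field_2 = split_fields(frame)
rebuilt = weave(field_1, field_2)

fields_per_second = 60
full_frames_per_second = fields_per_second / 2

print(len(field_1), len(field_2))   # lines per field
print(rebuilt == frame)             # a static image weaves back perfectly
print(full_frames_per_second)       # complete frames per second
```

Weaving only reconstructs the picture perfectly when nothing moves between the two fields. In real footage the fields are captured at different moments, which is where the combing on fast motion comes from.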

- Key Differences Summarized:

- Scanning Method: 1080p uses progressive scan (full frames), 1080i uses interlaced scan (fields).

- Image Clarity: 1080p generally offers superior clarity and sharpness due to the complete frames.

- Motion Blur: 1080p exhibits less motion blur.

- Artifacts: 1080i is more prone to interlacing artifacts (combing).

- Frame Rate: While both might be *advertised* as 60fps, 1080i effectively delivers 30fps of complete images.

In short: If you want the best picture quality, always opt for 1080p. 1080i is a legacy format and, while functional, will often appear less sharp and less smooth than 1080p.

What display mode is best for performance?

Forget smooth visuals, kid. FPS mode is where it’s at. That 30% input lag reduction? It’s the difference between a headshot and a head-scratcher. We’re talking milliseconds, the blink of an eye in a firefight, but those milliseconds decide who wins. Think of it like this: less lag means your aim translates to the screen *instantly*. No more frustrating missed shots because the game’s a beat behind. That BenQ you mentioned? A solid choice, the 0.5ms response time is insane. Ghosting? Blurring? Nonexistent. You’ll see every pixel-perfect detail, every enemy movement, crystal clear. But here’s the kicker: that speed comes at a cost. Expect some flickering. Your eyes will adjust, though. And you’ll be owning noobs before they even see you coming. This isn’t for casual players. This is for people who play to *win*. The ultimate advantage. Master it.

Oh, and don’t think just slapping on FPS mode is enough. You need a monitor with a high refresh rate to fully utilize it, 144Hz minimum, ideally higher. Anything less, and you’re bottlenecking the whole system. And yeah, you need a rig that can handle it. Low FPS in FPS mode is an oxymoron – counterproductive as hell. Get that framerate up. Then you’ll understand.

What picture mode is best for TV shows?

For the best TV show viewing experience, Filmmaker Mode is your starting point. It provides a highly accurate representation of the director’s intent, prioritizing color accuracy and detail. However, it’s optimized for darker room viewing. If you’re watching in a brighter room, you’ll likely need to increase the brightness slightly to maintain visibility. Don’t be afraid to experiment! Fine-tuning brightness and contrast is key to optimal picture quality tailored to *your* environment. Consider also adjusting the sharpness setting; slightly reducing it can often minimize unnatural halos and improve overall clarity. Remember, Filmmaker Mode typically disables any unnecessary processing like motion smoothing (soap opera effect), resulting in a more natural and cinematic look.

While Filmmaker Mode is a great baseline, understanding your TV’s specific picture settings gives you total control. Look into options like color temperature (try “warm” for a more comfortable experience), and explore advanced settings if your TV allows for more granular adjustments of things like gamma and black level. Small changes can make a big difference. Experimenting with these parameters can lead to an image that’s perfectly optimized for your preference and viewing conditions.

Should I set my cable box to 1080i or 720p?

Choosing between 1080i and 720p on your cable box depends heavily on your TV and your priorities. If “Native” resolution isn’t available, 1080i is generally the better option, despite what some might say.

While most TVs are 1080p, 1080i and 1080p share the same 1920×1080 pixel grid. The difference lies in the way the image is scanned: 1080p is progressive (every line of a frame is drawn in one pass), while 1080i is interlaced (odd and even lines are sent as separate fields). This means 1080i can sometimes appear slightly less sharp, especially on fast-moving scenes. However, this is often negligible.

- 1080i Advantages:

- Preserves native resolution for 1080i channels: No upscaling is needed for channels broadcasting in 1080i, resulting in a cleaner signal.

- Generally better for less powerful cable boxes: Upscaling 720p to 1080p puts more strain on a lower-end box, potentially leading to artifacts or lag.

- 1080i Disadvantages:

- 720p channels are upconverted: Your cable box will upscale 720p content to 1080i, which might introduce some processing artifacts, especially on older or lower-quality boxes. The quality of this upscaling can vary significantly depending on the cable box’s processing capabilities.

- Interlacing artifacts: The interlaced nature of 1080i can lead to noticeable combing effects in fast-moving scenes, particularly on larger screens, although modern TVs do a good job of de-interlacing.

With the box set to 720p, on the other hand, everything it outputs has to be upscaled by the TV to the panel’s native resolution (1080p or 4K, depending on your TV), and 1080i channels are downscaled by the box first. You are always relying on scaling somewhere. This can be better on some modern TVs which offer superior upscaling capabilities. Consider the upscaling capabilities of both your cable box and television when making your decision. If you have a high-end TV known for excellent upscaling, 720p might be a viable option for less strenuous processing.
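One way to reason about the trade-off is to list which device converts what for each combination of channel format and box setting. The sketch below is a simplified model, not how any particular box or TV reports its processing; the `conversions` function and the string format names are assumptions made for illustration.

```python
def conversions(channel, box_output, panel):
    """List the conversion steps between a broadcast channel and the panel.

    Formats are plain strings such as "720p", "1080i", "1080p" or "2160p".
    Simplified model: the box converts to its output setting, then the TV
    de-interlaces and scales to its native panel resolution.
    """
    steps = []
    if channel != box_output:
        steps.append(f"box: {channel} -> {box_output}")
    if box_output.endswith("i"):
        progressive = box_output[:-1] + "p"
        steps.append(f"TV: de-interlace {box_output} -> {progressive}")
        box_output = progressive
    if box_output != panel:
        steps.append(f"TV: scale {box_output} -> {panel}")
    return steps


# Every combination of channel format and box setting on a 1080p TV.
for channel in ("720p", "1080i"):
    for setting in ("720p", "1080i"):
        print(f"{channel} channel, box set to {setting}:",
              conversions(channel, setting, "1080p"))
```

In this model a 1080i channel with the box set to 1080i needs only de-interlacing in the TV, while a 1080i channel with the box set to 720p is scaled twice (down by the box, up by the TV).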

What is the best resolution for Full HD?

Full HD, or FHD, is the 1920 x 1080 pixel resolution – a standard that dominated displays for years. While it’s been largely surpassed by 4K and beyond, understanding its nuances remains crucial. That 2.07 megapixel count offered a significant jump in clarity over its predecessors, resulting in crisp visuals ideal for gaming (at least at the time). The “1080” refers to the vertical resolution, while “i” and “p” denote the scanning method: 1080i uses interlaced scanning (less common now), displaying half the lines at a time, while 1080p uses progressive scanning, displaying all lines simultaneously – resulting in a smoother, less flickery image, superior for gaming.

For gaming, the difference between 1080p and other resolutions becomes more apparent at higher frame rates. While 1080p offered a sweet spot balancing visual fidelity and performance on older hardware, modern high-refresh-rate displays (144Hz, 240Hz and beyond) at 1080p still shine. The reduced pixel count means less demanding rendering for the GPU, allowing for higher frame rates and smoother gameplay, a crucial advantage in competitive titles. However, the lower pixel density compared to 4K or even 1440p means finer details will be less sharp. Essentially, 1080p in gaming presents a trade-off: excellent performance versus potentially sacrificing visual detail for a smoother experience. The optimal choice depends greatly on your hardware and gaming preferences.
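The pixel-count argument is simple arithmetic. As a rough sketch (the resolution list and the `pixel_count` helper are chosen here for illustration), this compares how many pixels the GPU has to render per frame at each resolution:

```python
RESOLUTIONS = {
    "1080p (Full HD)": (1920, 1080),
    "1440p": (2560, 1440),
    "4K UHD": (3840, 2160),
}


def pixel_count(width, height):
    """Total pixels in one frame."""
    return width * height


full_hd = pixel_count(1920, 1080)

for name, (width, height) in RESOLUTIONS.items():
    pixels = pixel_count(width, height)
    print(f"{name}: {pixels / 1e6:.2f} megapixels, "
          f"{pixels / full_hd:.2f}x the pixels of Full HD")
```

Full HD works out to about 2.07 megapixels, and 4K has exactly four times as many pixels per frame, which is why the same GPU can push far higher frame rates at 1080p.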

How do I know if I have 1080i or 1080p?

Yo, so you wanna know if you’re rocking 1080i or 1080p? Check your display settings, noob. It’s all about how the image gets drawn to your screen.

1080p is progressive scan. Think of it like this: it draws every single line, one after the other, in a single pass. Smooth, clean, buttery visuals. This is what you want for competitive gaming. Less ghosting, less input lag – pure, unadulterated framerate dominance.

1080i is interlaced scan. It’s like drawing every other line, then going back and filling in the gaps. Two fields, each showing half the lines. Sounds efficient, right? Wrong. It introduces motion blur and that annoying “flicker” that’ll make your aim shaky AF. Back in the day, it was sorta ok for broadcast TV but it’s garbage for gaming.

Here’s the breakdown of why 1080p reigns supreme:

- Sharper Image: No more blurry lines or artifacts. Crystal clear vision for those crucial headshots.

- Smoother Motion: Say goodbye to that interlaced scan judder. It’s all about that silky smooth 60fps (or higher!).

- Lower Input Lag: Less delay between your actions and what’s happening on screen. Critical for competitive edge.

Basically, if you’re serious about gaming, 1080i is a major handicap. Get that 1080p locked in and dominate the leaderboard.

How do I change my resolution to Full HD?

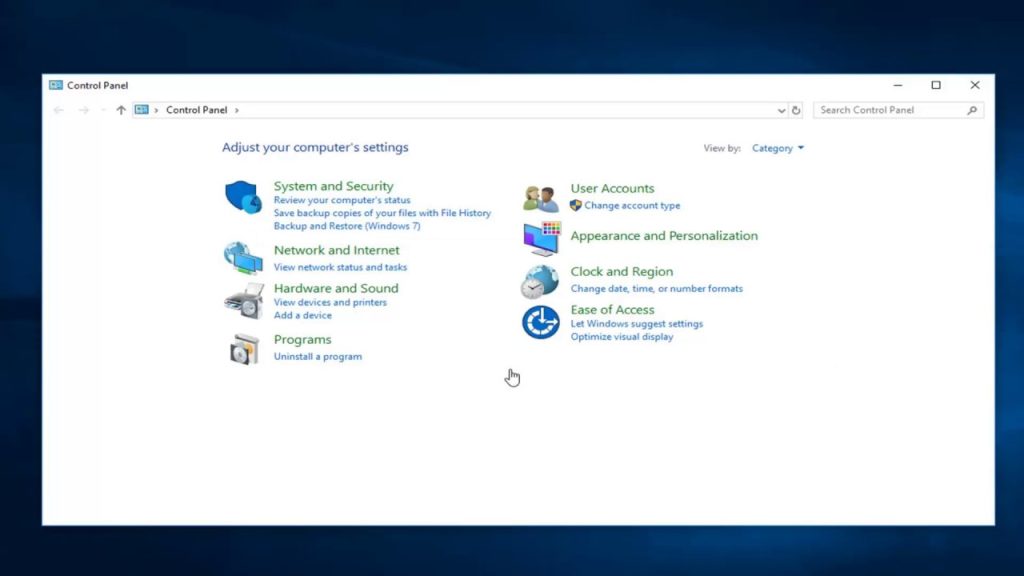

Altering your display resolution to Full HD (1920×1080) is a straightforward process, but optimizing it for gaming requires a nuanced approach. The basic steps remain the same: navigate to your display settings (usually found in your operating system’s control panel or system preferences), locate the “Display resolution” or equivalent setting, and select “1920 x 1080”. However, simply choosing the resolution isn’t enough for optimal gameplay.

Understanding the Implications:

- Performance Impact: Higher resolutions demand more processing power from your GPU. Running Full HD at high settings might lead to lower frame rates (FPS) than lower resolutions. Experiment with graphical settings to find the sweet spot between visual fidelity and performance.

- Scaling and Aspect Ratio: Ensure your aspect ratio is correctly set (typically 16:9 for Full HD). Incorrect settings can result in stretched or distorted images. The “Recommended” setting usually optimizes for your monitor’s native resolution, but exceptions exist.

- Refresh Rate: This dictates how many times your monitor updates per second, influencing smoothness. Aim for the highest refresh rate your monitor and GPU support at 1920×1080 for a smoother gaming experience. This is often found within the same display settings menu.
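Two of the points above reduce to quick calculations. This sketch uses hypothetical helper names (`aspect_ratio`, `frame_time_ms`) to check that a resolution really is 16:9 and to show how long each frame stays on screen at a given refresh rate:

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a resolution to its simplest width:height ratio."""
    divisor = gcd(width, height)
    return width // divisor, height // divisor


def frame_time_ms(refresh_hz):
    """Time between screen updates, in milliseconds."""
    return 1000 / refresh_hz


print(aspect_ratio(1920, 1080))  # Full HD
print(aspect_ratio(1280, 1024))  # an older non-widescreen resolution

for hz in (60, 144, 240):
    print(f"{hz} Hz: a new frame every {frame_time_ms(hz):.2f} ms")
```

If the ratio of the resolution you pick does not match your monitor’s, expect stretching or black bars. The shrinking frame time at higher refresh rates is the smoothness gain you are chasing.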

Advanced Considerations:

- GPU Drivers: Ensure your graphics card drivers are up-to-date. Outdated drivers can lead to compatibility issues and performance bottlenecks.

- In-Game Settings: Many games offer their own resolution settings. These settings should match your system’s display resolution. Furthermore, adjust in-game graphics settings (textures, shadows, anti-aliasing) to balance visual quality with performance.

- Monitor Capabilities: Your monitor’s specifications determine the maximum resolution and refresh rate it can handle. Exceeding these limits is impossible.

Troubleshooting: If you experience issues after changing your resolution, such as screen tearing or flickering, try updating your graphics drivers or reverting to a previously working resolution. Sometimes, restarting your computer is also necessary.

How can I get a better picture on my TV?

Yo, what’s up gamers? So your TV picture sucks? Let’s fix that. Forget that “sharper is better” nonsense. Turning down sharpness actually reduces digital artifacts and makes things look smoother, especially important for gaming. Over-sharpening creates halos around objects – nasty! Think natural, not hyper-realistic.

Next, ditch Motion Smoothing or any soap opera effect. It introduces that weird, unnatural look, especially noticeable in fast-paced games. You want the cinematic feel, the film grain – it adds depth. Leave that motion interpolation crap off.

Vivid mode? Please. That’s for showing off your TV in a brightly lit Best Buy. Kill the brightness – it’s not about blasting your eyes out, it’s about detail. Lower it until blacks are black and details pop. Adjust contrast subtly to balance brightness – you want that sweet spot.

Picture modes are crucial. Game mode, if available, is your best friend. It lowers input lag significantly, which is HUGE for responsive gameplay. If you’re watching movies, try “Movie” or “Cinema” mode – they prioritize accurate color representation. Experiment!

Pro-tip: Calibrate your TV! There are tons of resources online – websites and YouTube videos – that guide you through adjusting RGB levels and other settings for optimal picture quality. It’s a bit of a time investment, but the results are worth it. For gaming, getting a proper calibration is even more important than just tweaking individual settings.

And finally, check your connections. Are you using the best possible cable (HDMI 2.1 for 4K/120fps gaming)? Dust those ports! A clean connection ensures a crisp signal. A faulty cable can cause all kinds of picture issues.

How do I get the best resolution on my TV?

Getting the best resolution on your TV isn’t just about selecting the highest number; it’s about optimizing the entire picture pipeline. While selecting your TV’s native resolution (often 1080p or 4K) in your source device’s settings is crucial, many other factors affect perceived image quality.

First, ensure your source (game console, streaming box, Blu-ray player) is actually outputting at the native resolution. Many devices default to lower resolutions to conserve bandwidth or power. Check its video settings meticulously. Consider using a high-speed HDMI cable (HDMI 2.1 for 4K@120Hz) to guarantee a clean signal. Cheap cables can introduce noticeable artifacts or signal loss.
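To get a feel for why 4K at 120Hz calls for HDMI 2.1, here is a back-of-the-envelope estimate. It assumes uncompressed 8-bit RGB (24 bits per pixel) and ignores blanking intervals and link encoding overhead, so real requirements are somewhat higher. The link figures are the nominal maximum rates of HDMI 2.0 (18 Gbit/s) and HDMI 2.1 (48 Gbit/s); `raw_video_gbps` is a hypothetical helper.

```python
HDMI_2_0_GBPS = 18  # nominal maximum link rate
HDMI_2_1_GBPS = 48  # nominal maximum link rate


def raw_video_gbps(width, height, fps, bits_per_pixel=24):
    """Uncompressed pixel data rate in Gbit/s (no blanking, no overhead)."""
    return width * height * fps * bits_per_pixel / 1e9


modes = {
    "1080p @ 60 Hz": (1920, 1080, 60),
    "4K @ 60 Hz": (3840, 2160, 60),
    "4K @ 120 Hz": (3840, 2160, 120),
}

for name, (width, height, fps) in modes.items():
    rate = raw_video_gbps(width, height, fps)
    fits_2_0 = rate <= HDMI_2_0_GBPS
    print(f"{name}: about {rate:.2f} Gbit/s raw, "
          f"{'fits' if fits_2_0 else 'exceeds'} the HDMI 2.0 link rate")
```

Even with this optimistic estimate, 4K at 120Hz lands well above what HDMI 2.0 can carry, while still fitting comfortably within HDMI 2.1.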

Beyond resolution, sharpness is key. While your TV has a sharpness setting, overdoing it can introduce “halos” around objects. Experiment for optimal results. Noise reduction is another critical setting – often labeled as “Digital Noise Reduction” or similar. Too much can soften the image, but a moderate setting can significantly reduce graininess, especially noticeable in darker scenes or with older game titles.

The blue light filter, while helpful for eye strain at night, shifts the whole picture toward yellow and can noticeably distort colors. Adjust its intensity carefully. Motion smoothing (the source of the “Soap Opera Effect”) should generally be avoided. While it can reduce motion blur, it gives games an unnatural, overly smooth look that many find distracting, and the extra processing can add input lag. Many modern TVs offer several different motion interpolation settings; experimenting can help find a balance.

Using different apps can lead to varied picture quality. Some apps may downscale or over-process video. External interference, such as other electronics close to your TV, can also cause image degradation. Try moving your Wi-Fi router or other devices further away. Switching HDMI ports can sometimes resolve connection issues or improve signal stability.

Finally, if all else fails, a factory reset of your TV might resolve unexpected software glitches affecting image processing. Remember to back up your TV’s settings first if possible.

Should my TV be on 1080i or 1080p?

Alright guys, so you’re asking about 1080i vs. 1080p? Let’s break it down. 1080i, that’s interlaced. Think of it like flipping pages really fast – you get half the picture, then the other half, incredibly quickly. 1080p, on the other hand, is progressive. It shows *all* the lines at once, a complete picture in each frame. Now, your eye might not *notice* the difference in static scenes, or slow-paced stuff. But when things get hectic – fast-paced action scenes, sports, anything with rapid movement – that’s where 1080p shines. The interlacing in 1080i can create a noticeable judder or a shimmering effect, especially on larger screens. It’s like the difference between a smooth, buttery video and one that looks a little… choppy. This is why every new TV is a progressive display; 1080i is an older technology that modern sets still accept as an input but de-interlace before showing it. If your TV *can* do 1080p, always go for it. You’ll get a much clearer and smoother image, especially in demanding scenes. Think of it as the difference between a well-oiled machine and one that’s constantly skipping gears.

What is the best setting for image quality?

Optimizing in-game image quality is crucial for competitive advantage. Forget “best” – it’s about finding the *optimal* balance for *your* system and game. The provided settings are a decent starting point, but need context.

Drive Mode: Single shot prioritizes image clarity for stills; continuous is essential for capturing fleeting moments of action, especially in fast-paced games. Consider burst mode for maximum capture rate.

Noise Reduction: Turning off both long exposure and high ISO noise reduction is generally correct for minimizing processing latency which can affect reaction time. This is a trade-off; you might see increased noise, especially in low-light scenarios. Experiment to find your acceptable noise threshold.

Color Space: sRGB is a widely compatible standard, perfect for sharing. Consider Adobe RGB for wider color gamut if your workflow supports it and color accuracy is paramount (though this generally offers negligible benefit in competitive gameplay).

Image Stabilization: Essential for handheld shooting to mitigate blur; disable when using a tripod, as it can introduce unwanted corrections. In-game, consider the game’s built-in anti-aliasing and motion blur settings as your primary stabilization tools.

HDR/DRO: Often detrimental in competitive scenarios. HDR can increase processing time and DRO (dynamic range optimization) might wash out crucial details – especially critical for recognizing opponents in complex environments. Keep these off unless you’re confident the performance impact is minimal.

Beyond the Basics: Frame rate, resolution, and shadow detail settings are even more crucial than image quality settings. Prioritize high frame rates for smoother gameplay and faster reaction times, potentially sacrificing resolution if necessary. Experiment with in-game graphics settings, not just camera settings.

Remember: What works optimally is highly dependent on your hardware, the specific game, and your personal preferences. Consistent benchmarking and experimentation are key to finding your perfect setup.

Which photo mode is best?

Let’s cut the crap. Aperture Priority (Av or A) is your go-to for most situations. Variable lighting? Av handles it like a seasoned pro. It lets you dictate depth of field – that blurry background you crave – while the camera manages the shutter speed. Master this, and you’ll be a photography ninja.

Manual (M) mode? Think of it as the endgame. It’s for situations where you’re the master puppeteer of light, like a perfectly controlled studio. Need precise exposure consistency across a series of shots? Manual’s your weapon of choice. It’s brutally effective, but demands a deep understanding of the exposure triangle.

Here’s the breakdown of why M is crucial for your advancement:

- Understanding the Exposure Triangle: Mastering M forces you to internalize the relationship between aperture (f-stop), shutter speed, and ISO. This isn’t some textbook knowledge; it’s muscle memory, crucial for adapting to any lighting scenario.

- Predictable Results: In M mode, you’re in complete control. Every setting is *your* choice. This allows for incredibly consistent results, vital for professional work or when reproducing a specific aesthetic.

- Pushing Creative Boundaries: Want that ultra-shallow depth of field with a long exposure? Or a perfectly sharp image in low light? M mode gives you the power to achieve effects impossible in automated modes. It’s where the real photographic artistry resides.
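The exposure triangle becomes easier to internalize with the standard exposure value formula, EV = log2(N² / t), where N is the f-number and t is the shutter time in seconds (at a fixed ISO). The sketch below is a study aid under that assumption; the `exposure_value` helper and the example settings are illustrative, not taken from any camera.

```python
from math import log2


def exposure_value(f_number, shutter_seconds):
    """Exposure value at a fixed ISO: EV = log2(N^2 / t)."""
    return log2(f_number ** 2 / shutter_seconds)


# Three settings a photographer would treat as roughly equivalent:
# open the aperture one stop, halve the shutter time, and so on.
settings = [
    (5.6, 1 / 250),
    (8.0, 1 / 125),
    (11.0, 1 / 60),
]

for f_number, shutter in settings:
    ev = exposure_value(f_number, shutter)
    print(f"f/{f_number} at 1/{round(1 / shutter)} s -> EV {ev:.2f}")
```

The three results land within a fraction of a stop of each other (marked f-numbers and shutter speeds are rounded), so the exposure is nearly the same while depth of field and motion blur change. That is exactly the kind of trade M mode puts in your hands.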

Think of it this way: Av is your trusty sidekick for everyday adventures. M is the ultimate boss battle – conquer it, and you’ll truly dominate the photographic landscape.

Which is better picture 1080i or 1080p?

Alright folks, let’s settle this 1080i vs. 1080p debate once and for all. The short answer is 1080p is better, almost always.

1080i, or interlaced, displays the image in two fields. Think of it like flipping through pages really fast – you get half the image at a time. 1080p, or progressive, displays all the lines simultaneously. This makes a huge difference, especially with fast-moving action.

While your eye might not *always* notice the difference in static scenes, the moment things start to move – panning shots, fast-paced games, sports – 1080i can suffer from something called interlace artifacts. These look like jagged lines or a shimmering effect, particularly noticeable around edges of objects in motion.

Here’s the breakdown:

- 1080p (Progressive): Sharper, cleaner image, especially in motion. Less artifacting. Smoother viewing experience.

- 1080i (Interlaced): Can appear slightly softer. Prone to motion blur and artifacts. Often used in older broadcasting standards.

So, unless you’re stuck with older equipment, always prioritize 1080p. The difference is subtle sometimes, but when it’s there, you’ll definitely notice it, especially if you’re a gamer or you enjoy watching action-packed content. It’s a superior standard for a reason.

Which is better Full HD or 1080p?

Yo, what’s up, fam? So, Full HD and 1080p? Same thing, different names. We’re talking 1920 x 1080 pixels – that’s your standard high-definition resolution. Think of it as the baseline for crisp gameplay.

Now, 4K (3840 x 2160) is a whole different beast. That’s over 8 million pixels, meaning way more detail and a sharper, smoother picture. The difference is HUGE, especially if you’re rocking a big screen or streaming at high bitrates. Think of it like going from standard definition to Blu-ray.

Higher resolution means better image quality, but it also means more demanding on your system. You need a beefier GPU and more bandwidth to run 4K smoothly. If you’re aiming for the absolute best visual experience, especially for competitive gaming where those extra pixels can make a difference spotting enemies, then 4K is the way to go. But remember, that extra clarity comes with a higher price tag for both the monitor and the hardware needed to drive it.

Ultimately, the “better” resolution depends on your budget, hardware capabilities, and what you’re trying to achieve. For most people, 1080p still delivers fantastic visuals, especially at a more affordable price point.

Which picture format is best quality?

So, you’re asking about the best quality picture format? TIFF is king, hands down, especially if you’re aiming for high-res prints – think museum-quality stuff, art photography, that sort of thing. It’s lossless, meaning no image data is thrown away during compression, so you retain insane detail. Think scanning precious artwork? TIFF’s your go-to. But, and this is a big BUT, the file sizes are monstrous. We’re talking tens or even hundreds of megabytes for a single high-resolution image, and gigabytes for very large scans. Sharing them? Forget about it unless you have plenty of storage and a whole lot of patience. It’s a professional format, not one for casual sharing on social media. If you need smaller files for web or general sharing, consider JPEG, which sacrifices some quality for much smaller file sizes. PNG is a solid alternative for images with sharp lines and text, great for logos and illustrations, and it is lossless too. Basically, choose your format based on your needs: TIFF for maximum quality, but be prepared for massive files; JPEG for everyday use and web sharing; and PNG for graphics and text.
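For a sense of scale, the size of an uncompressed image is just width × height × channels × bytes per channel. The sketch below assumes a hypothetical 24-megapixel (6000×4000) RGB photo; `uncompressed_megabytes` is an illustrative helper, and real TIFF files may be smaller when lossless compression is enabled.

```python
def uncompressed_megabytes(width, height, channels=3, bits_per_channel=8):
    """Raw pixel data size in megabytes (10^6 bytes), before any compression."""
    return width * height * channels * bits_per_channel / 8 / 1e6


# A hypothetical 24-megapixel photo, RGB.
width, height = 6000, 4000

size_8_bit = uncompressed_megabytes(width, height, bits_per_channel=8)
size_16_bit = uncompressed_megabytes(width, height, bits_per_channel=16)

print(f"8-bit RGB:  {size_8_bit:.0f} MB")
print(f"16-bit RGB: {size_16_bit:.0f} MB")
```

A JPEG of the same photo is typically a small fraction of that, which is the whole trade: lossy compression buys you small files at the cost of discarded detail.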